Understanding NeRF: Neural Radiance Fields

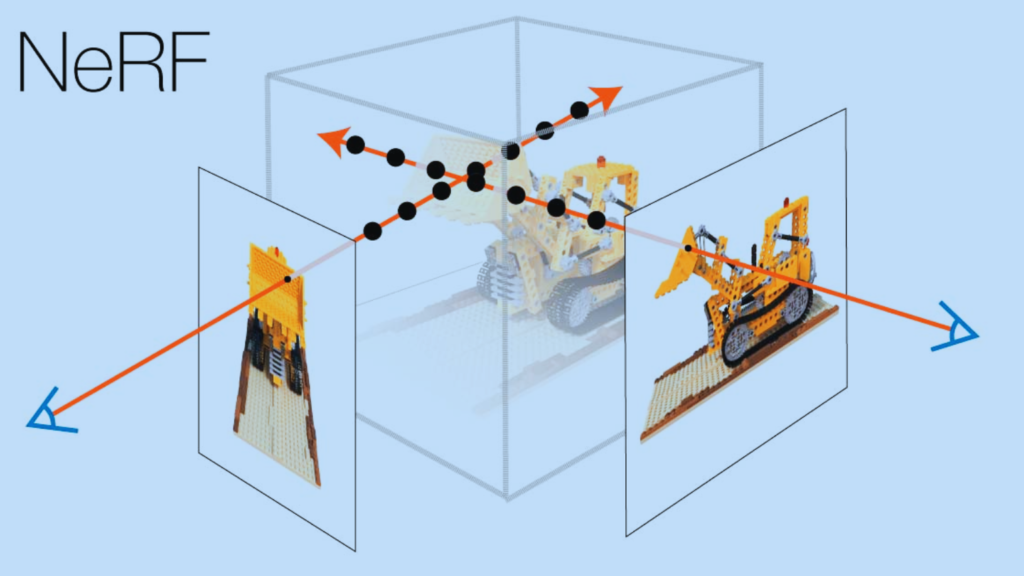

NeRF, short for Neural Radiance Fields, is an AI-based approach to 3D image rendering and novel view synthesis. Its core idea is to use a neural network to learn a continuous volumetric scene function from a sparse set of 2D images of a scene taken from known camera positions.


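This function maps a 3D spatial location (along with a 2D viewing direction) to a color and a volume density, which acts like a differentiable opacity. Rendering these values along camera rays with classical volume rendering produces highly detailed, photorealistic images of the scene from new viewpoints.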
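To make the rendering step concrete, here is a minimal NumPy sketch of the discrete volume rendering rule described in the original paper. Each sample i along a ray has a density σ_i, a color c_i, and a spacing δ_i to the next sample. Its weight is T_i (1 - exp(-σ_i δ_i)), where the transmittance T_i = exp(-Σ_{j<i} σ_j δ_j) is the probability that the ray reaches that sample without being blocked. The function name composite_ray and the toy inputs are illustrative assumptions, and the densities and colors are given directly rather than queried from a trained network.

```python
import numpy as np

def composite_ray(sigmas, colors, t_vals):
    """Alpha-composite samples along one ray (hypothetical helper).

    sigmas: (N,) non-negative volume densities at the sample points
    colors: (N, 3) RGB colors at the sample points
    t_vals: (N,) increasing distances of the samples along the ray
    Returns the rendered RGB color and the per-sample weights.
    """
    # Spacing between adjacent samples; the last interval is treated as
    # effectively infinite (1e10), a common convention in NeRF code.
    deltas = np.append(np.diff(t_vals), 1e10)
    # Probability that the ray stops inside each interval.
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance T_i = prod_{j<i} (1 - alpha_j) = exp(-sum_{j<i} sigma_j delta_j).
    trans = np.cumprod(np.append(1.0, 1.0 - alphas[:-1]))
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights

# Toy ray: empty space, then a dense red surface in front of blue samples.
t = np.linspace(2.0, 6.0, 5)
sig = np.array([0.0, 0.0, 50.0, 0.0, 0.0])
col = np.array([[0, 0, 1], [0, 0, 1], [1, 0, 0], [0, 0, 1], [0, 0, 1]], dtype=float)
rgb, w = composite_ray(sig, col, t)  # rgb is approximately [1, 0, 0]
```

Because every step is differentiable, the same computation lets the network be trained by comparing rendered colors against the pixels of the input photographs.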

The Intriguing Story Behind NeRF

NeRF was developed in response to long-standing challenges in 3D rendering. Traditional methods, while effective, were computationally intensive, and the images they produced often lacked photorealistic detail. These limitations spurred a team of researchers from UC Berkeley, Google Research, and UC San Diego to propose a novel approach: NeRF.

The NeRF paper, titled “NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis,” was released in March 2020.

The paper describes how NeRF uses a fully connected deep network (a multilayer perceptron, or MLP) to model the volumetric scene, and how classical volume rendering turns that model into images. It also introduced two techniques that proved crucial to quality: a positional encoding that lets the MLP represent high-frequency detail, and a hierarchical sampling procedure that concentrates network queries along each ray where they contribute most to the final image.
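One technique the paper introduced for capturing fine detail is positional encoding: before reaching the MLP, each input coordinate p is replaced by sin(2^k π p) and cos(2^k π p) for k = 0, ..., L-1. The paper uses L = 10 for positions and L = 4 for viewing directions. The sketch below assumes NumPy and inputs roughly in [-1, 1]; the function name is hypothetical, and it groups the sine and cosine features differently from the paper's formula, which makes no difference to the network.

```python
import numpy as np

def positional_encoding(p, num_freqs):
    """Map each coordinate of p to sines and cosines at octave frequencies.

    p: (..., D) array of coordinates
    num_freqs: number of frequency bands L
    Returns an array of shape (..., D * 2 * L).
    """
    freqs = (2.0 ** np.arange(num_freqs)) * np.pi          # 2^k * pi, k = 0..L-1
    scaled = p[..., None] * freqs                          # (..., D, L)
    enc = np.concatenate([np.sin(scaled), np.cos(scaled)], axis=-1)  # (..., D, 2L)
    return enc.reshape(*p.shape[:-1], -1)                  # flatten per point

# A batch of 4 positions with L = 10 gives 3 * 2 * 10 = 60 features each.
points = np.random.default_rng(0).uniform(-1.0, 1.0, size=(4, 3))
features = positional_encoding(points, 10)
```

The higher frequency bands let small changes in position produce large changes in the network's input, which is what allows a plain MLP to reproduce sharp edges and fine textures.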

Why is NeRF Famous?

NeRF garnered attention in the AI community for its novel approach to a long-standing challenge. Its ability to produce high-quality, detailed 3D scene representations from a relatively small set of 2D images was groundbreaking.

Several qualities explain NeRF’s fame.

First, it delivers exceptional detail, capturing fine structures and view-dependent effects such as specular reflections. Second, it stores a scene compactly in the weights of a small neural network rather than in a large voxel grid or mesh, although rendering still requires many network queries per pixel. Finally, it is flexible: the same method handles a range of scene types, including indoor and outdoor environments and both artificial and natural structures.
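How one scene can keep fixed geometry yet show view-dependent appearance comes down to the network's wiring: density is predicted from position alone, while color also sees the viewing direction (expressed as a 3D unit vector). The toy NumPy sketch below illustrates only that wiring. Its random weights, tiny layer sizes, and the name radiance_field are hypothetical; the paper uses eight 256-unit layers plus positional encoding, both omitted here, so the outputs carry no meaning beyond the structure.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# Hypothetical, heavily shrunk random weights standing in for a trained MLP.
W_pos = rng.normal(size=(3, 32))        # position -> hidden features
W_sigma = rng.normal(size=(32, 1))      # hidden features -> density
W_rgb = rng.normal(size=(32 + 3, 3))    # hidden features + direction -> color

def radiance_field(x, d):
    """Return (density, rgb) for position x (3,) and unit view direction d (3,)."""
    h = relu(x @ W_pos)
    sigma = relu(h @ W_sigma)[..., 0]                  # depends on x only
    rgb = sigmoid(np.concatenate([h, d], axis=-1) @ W_rgb)  # depends on x and d
    return sigma, rgb

# Same point seen from two directions: same density, different color.
x = np.array([0.1, -0.4, 0.7])
s1, c1 = radiance_field(x, np.array([0.0, 0.0, 1.0]))
s2, c2 = radiance_field(x, np.array([1.0, 0.0, 0.0]))
```

Keeping density independent of direction forces every view to agree on where surfaces are, while the direction input leaves room for highlights that move as the camera moves.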

The Global Impact of NeRF: Which Country Made It?

NeRF is widely acclaimed, and its creation is credited to researchers from UC Berkeley, Google Research, and UC San Diego in the United States. Their work has reshaped 3D image rendering and view synthesis, and it has inspired researchers worldwide to explore new applications and enhancements of the technology.

The Significance of the NeRF Paper

The NeRF paper is pivotal in AI and computer vision. It showed that a neural network, optimized through volume rendering, could represent a complex real scene well enough to synthesize photorealistic novel views. The paper carefully presents the full process, from the construction of the neural network to the techniques used for sampling and optimizing the model.
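One of those sampling techniques is hierarchical sampling: a coarse pass evaluates the network at points spread along the ray, and its compositing weights then define a probability distribution from which extra "fine" samples are drawn, so more queries land near visible surfaces. Below is a simplified NumPy sketch of that inverse-transform step, assuming the coarse weights are already known. The function name, the small constant added to the weights, and the toy bins are illustrative choices, not the paper's exact code.

```python
import numpy as np

def sample_pdf(bin_edges, weights, n_samples, rng):
    """Draw fine-pass depths in proportion to coarse-pass weights.

    bin_edges: (M + 1,) increasing depths bounding M coarse intervals
    weights:   (M,) non-negative compositing weights from the coarse pass
    Returns (n_samples,) sorted depths for the fine pass.
    """
    w = weights + 1e-5                                  # keep every bin reachable
    pdf = w / np.sum(w)                                 # piecewise-constant PDF
    cdf = np.concatenate([[0.0], np.cumsum(pdf)])       # (M + 1,), strictly increasing
    u = np.sort(rng.uniform(size=n_samples))            # uniform draws in [0, 1)
    # Inverse-transform sampling: invert the piecewise-linear CDF.
    return np.interp(u, cdf, bin_edges)

# Toy coarse pass: nearly all weight in the interval [4.0, 4.5].
rng = np.random.default_rng(0)
edges = np.linspace(2.0, 6.0, 9)
coarse_w = np.zeros(8)
coarse_w[4] = 1.0
t_fine = sample_pdf(edges, coarse_w, 1000, rng)
```

In a full pipeline, these fine depths would be merged with the coarse ones and all of them rendered with a second network, spending computation mostly where the ray actually hits something.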

The NeRF paper holds a significant place in AI literature because of the radical shift it proposed in 3D rendering methodology. Its novel approach, combined with its potential for wide-ranging applications, makes it a must-read for anyone working in AI, computer vision, or 3D rendering.

Conclusion

The development of Neural Radiance Fields (NeRF) represents a significant step forward in 3D image rendering. Its methodology uses a neural network to turn a small set of 2D images into a detailed, photorealistic 3D scene representation, and it has fundamentally changed how researchers approach view synthesis.

Though still relatively new, NeRF continues to make waves in the AI community and to inspire new research. Its story, as documented in the NeRF paper, shows how innovative thinking combined with AI can reshape established processes and open new avenues for technological advancement.