Kaggle vs. Google Colab: Choosing the Right Platform

Kaggle and Colab are two popular platforms in the data science community that offer distinct features and tools for data scientists and machine learning practitioners. Each has its own strengths and weaknesses, but they share a common goal: providing a collaborative environment where users can explore data, build models, work with other data scientists, and solve data science challenges. In this article, we compare Kaggle and Google Colab, highlight their similarities and differences, and help you decide which platform best suits your data science needs.

Kaggle

Kaggle is a data science platform that offers a variety of features and tools for data scientists and machine learning practitioners. Here is a more detailed breakdown of its features:

Datasets and Competitions

Kaggle provides access to a vast collection of datasets and competitions, which allow users to explore and analyze real-world data and compete with other data scientists to solve complex problems. The platform has hosted a wide range of competitions, from improving gesture recognition for Microsoft Kinect to improving the search for the Higgs boson at CERN. The competition host prepares the data and a description of the problem, and participants experiment with different techniques and compete against each other to produce the best models.

Notebooks (Formerly Kernels)

Kaggle offers a web-based environment for writing, running, and sharing code called Notebooks. The feature was previously named Kernels; both names refer to the same thing. Notebooks run in the cloud and support Python and R, so users can work with Kaggle datasets without any local setup. Notebooks can be shared publicly or kept private, and their owners can invite collaborators to work on them.
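Because attached datasets live inside the notebook's file system, a common first cell simply lists what is available. The sketch below assumes Kaggle's convention of mounting attached datasets read-only under `/kaggle/input`; `list_input_files` is a hypothetical helper name, and the `root` parameter lets the same code run against a local folder.

```python
from __future__ import annotations

import os


def list_input_files(root: str = "/kaggle/input") -> list[str]:
    """Return every file path under `root`, sorted for stable output.

    Assumption: on Kaggle, attached datasets are mounted under /kaggle/input.
    If `root` does not exist (e.g. on a laptop), os.walk yields nothing and
    the result is an empty list.
    """
    paths = []
    for dirname, _subdirs, filenames in os.walk(root):
        for filename in filenames:
            paths.append(os.path.join(dirname, filename))
    return sorted(paths)


if __name__ == "__main__":
    for path in list_input_files():
        print(path)
```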
GPU and TPU Access

Kaggle provides free access to GPU and TPU hardware, subject to usage quotas, which can significantly speed up the training of machine learning models. This is especially useful for large datasets or complex models. Kaggle also provides a variety of tools and resources for optimizing code and taking advantage of the available hardware.

Community and Learning

Kaggle has a large and active community of data scientists and machine learning practitioners, which provides a wealth of knowledge and resources for learning and improving one's skills. The platform offers a variety of learning resources, including courses, tutorials, and blog posts. Kaggle also has discussion forums where users can ask questions, share ideas, and collaborate with others. Additionally, Kaggle hosts events such as hackathons and meetups, which give users opportunities to network and learn from each other.

Google Colab

Google Colab is a cloud-based platform that provides a variety of features and tools for data scientists and machine learning practitioners. Here is a more detailed breakdown of its features:

Cloud-Based Jupyter Notebooks

Google Colab provides a cloud-based environment for creating and sharing Jupyter notebooks. Users can write and run code in a web-based interface without any local installation or setup. Colab notebooks can be shared publicly or kept private, and users can collaborate on them in real time.

Free GPU Access

Google Colab provides free access to GPU hardware, which can significantly speed up the training of machine learning models. This is especially useful for large datasets or complex models. Colab also provides a variety of tools and resources for optimizing code and taking advantage of the available hardware.
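Both platforms attach an accelerator only when it is enabled for the notebook, so it can be worth confirming that a GPU is actually visible before starting a long training run. A minimal, heuristic sketch using only the standard library, assuming an NVIDIA GPU whose driver provides the `nvidia-smi` command (TPUs are not detected); `list_nvidia_gpus` is a hypothetical helper:

```python
from __future__ import annotations

import shutil
import subprocess


def list_nvidia_gpus(timeout: float = 10.0) -> list[str]:
    """Return the GPU lines printed by `nvidia-smi -L`, or [] if none are visible.

    Heuristic: assumes an NVIDIA GPU whose driver puts `nvidia-smi` on PATH.
    It does not detect TPUs.
    """
    if shutil.which("nvidia-smi") is None:
        return []  # no NVIDIA driver tools available, e.g. a CPU-only session
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"],
            capture_output=True,
            text=True,
            timeout=timeout,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return []
    if result.returncode != 0:
        return []
    # Each attached GPU is reported on its own line, e.g. "GPU 0: <model> (UUID: ...)".
    return [line for line in result.stdout.splitlines() if line.startswith("GPU")]


if __name__ == "__main__":
    gpus = list_nvidia_gpus()
    print(gpus if gpus else "No NVIDIA GPU detected; check the notebook's accelerator setting.")
```

If the list comes back empty on Colab, the accelerator can be switched under Runtime > Change runtime type; on Kaggle, it is chosen in the notebook's settings. Frameworks offer their own checks as well, such as `torch.cuda.is_available()` in PyTorch.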
Integration with Google Services

Google Colab is integrated with a variety of Google services, including Google Drive, Google Sheets, and Google Cloud Storage. This makes it easy to import and export data, collaborate with others, and take advantage of other Google services.

Preinstalled Libraries and Resource Quotas

Google Colab comes with many preinstalled libraries, including popular machine learning libraries such as TensorFlow, PyTorch, and scikit-learn, so users can get started quickly without manual installation or setup. Colab also enforces usage limits on CPU, GPU, and memory, which cap the resources a single user can consume. These limits help ensure fair use and prevent abuse of the platform. Users who need more resources can upgrade to a paid plan such as Colab Pro.

Choosing Between Kaggle and Google Colab

Kaggle and Google Colab are both cloud-based platforms that provide a variety of features and tools for data scientists and machine learning practitioners. Kaggle offers access to a vast collection of datasets and competitions, while Colab provides a free cloud-based environment for creating and sharing Jupyter notebooks, with free GPU access. The right choice depends on your specific needs and preferences.

Conclusion

In summary, Kaggle shines for its rich datasets and collaborative competitions, making it ideal for learning and skill development. Google Colab offers a cloud-based, GPU-accelerated environment suited to quick experimentation and personal projects. Choose Kaggle for community engagement and deep learning challenges, and Colab for its convenience and free GPU access for efficient model training. Both platforms have distinct advantages, so weigh your goals and preferences when deciding.
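For notebooks meant to run on either platform, a small helper can pick the right data location. This is a best-effort sketch under stated assumptions: it treats the `KAGGLE_KERNEL_RUN_TYPE` environment variable as a marker of a Kaggle session and the presence of the `google.colab` package as a marker of Colab, and on Colab it mounts Google Drive with `google.colab.drive.mount`. The function names are hypothetical.

```python
from __future__ import annotations

import importlib.util
import os
from pathlib import Path


def detect_platform() -> str:
    """Best-effort guess at where this notebook runs: 'kaggle', 'colab', or 'local'."""
    # Assumption: Kaggle notebook sessions define this environment variable.
    if "KAGGLE_KERNEL_RUN_TYPE" in os.environ:
        return "kaggle"
    # The google.colab package is preinstalled in Colab runtimes.
    try:
        if importlib.util.find_spec("google.colab") is not None:
            return "colab"
    except (ModuleNotFoundError, ValueError):
        pass  # the parent "google" package is absent, so this is not Colab
    return "local"


def default_data_dir(platform: str) -> Path:
    """Pick a data directory appropriate to each platform."""
    if platform == "kaggle":
        return Path("/kaggle/input")  # attached datasets (read-only)
    if platform == "colab":
        from google.colab import drive  # only importable inside Colab

        drive.mount("/content/drive")  # prompts the user to authorize Drive access
        return Path("/content/drive/MyDrive")
    return Path.cwd() / "data"


if __name__ == "__main__":
    platform = detect_platform()
    print(platform, default_data_dir(platform))
```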
FAQs

1. Is Kaggle a Google product?
Yes. Kaggle, operated by Google LLC, is an online hub where data scientists and machine learning practitioners participate in competitions and build a community dedicated to data science.

2. What language does Kaggle use?
Python and R are both prevalent on Kaggle and across the wider data science field. If you are starting from scratch, we suggest Python, because it is a versatile, general-purpose language applicable across the entire range of data science tasks.

3. Is Google Colab just Python?
Google Colab, a product of Google Research, is a freemium platform that permits users

Kaggle vs Hugging Face: A Comprehensive Comparison

Hugging Face and Kaggle are both online platforms that provide resources for machine learning and data science, but they have different strengths and weaknesses. Hugging Face is primarily focused on pre-trained models and natural language processing (NLP), while Kaggle hosts competitions on a variety of topics, including image classification, natural language processing, and fraud detection. Hugging Face is often considered easier to use, while Kaggle has a larger community. This blog takes a more detailed look at the similarities and differences between Kaggle and Hugging Face.

Exploring Kaggle

A. Platform Purpose and Features

Kaggle is a platform that serves as a hub for data science and machine learning practitioners. It offers a variety of features and resources to help users learn, improve their skills, and network with other professionals in the field. Some of the key features of Kaggle include:

B. Benefits for Data Scientists

Kaggle offers several benefits for data scientists and machine learning practitioners, including:

C. Drawbacks and Limitations

While Kaggle offers many benefits, the platform also has some drawbacks and limitations, including:

Understanding Hugging Face

A. Platform Purpose and Features

Hugging Face is a company that develops open source tools and resources for natural language processing (NLP) and machine learning. Its flagship product is the Transformers library, which provides a unified API for downloading and using pre-trained models. Some of the key features of Hugging Face include:

B. Benefits for Data Scientists

Hugging Face offers several benefits for data scientists and NLP practitioners, including:

C. Drawbacks and Limitations

While Hugging Face offers many benefits, the platform also has some drawbacks and limitations, including:

Comparison of Hugging Face and Kaggle

Target Audience

Hugging Face and Kaggle have different target audiences. Hugging Face is primarily focused on NLP practitioners, while Kaggle is more general and caters to a wider range of data science and machine learning practitioners. Hugging Face's tools and resources are designed primarily for NLP tasks, while Kaggle's competitions and resources cover a wider range of topics.

Learning Curve

The two platforms also have different learning curves. Hugging Face is generally considered easier to use than Kaggle because it is a more focused platform with fewer features. The Hugging Face Course provides a structured learning path for NLP practitioners, while Kaggle's resources are more self-directed. However, Kaggle's competitions can be a great way to learn new data science and machine learning techniques.

Collaborative Aspect

Both platforms offer opportunities for collaboration and knowledge sharing. The Hugging Face Hub provides a place to share and reuse pre-trained models, while Kaggle Notebooks let users share their code and collaborate on projects. Both platforms have active communities of users who are willing to help others.

Impact on the Data Science Community

Both Hugging Face and Kaggle have had a significant impact on the data science community. Hugging Face's Transformers library has become a popular tool for NLP practitioners, while Kaggle's competitions have helped advance the state of the art in data science and machine learning. Both platforms have also contributed to the open source community, making their tools and resources accessible to a wider range of users.
Conclusion

Both Hugging Face and Kaggle are valuable platforms for data science and machine learning practitioners, but they have different strengths and weaknesses. Hugging Face is more focused on NLP and is generally considered easier to use, while Kaggle is more general and offers a wider range of resources and opportunities for learning and collaboration. Ultimately, the choice between the two depends on your specific needs and interests. Whether you are interested in NLP or other areas of data science and machine learning, both platforms have something to offer, so why not explore both and see which one fits you best?

FAQs

Can you use Hugging Face for free?
Yes, for many uses. Hugging Face also serves as a platform for open source models, datasets, and libraries, supporting collaboration through a Git-based repository structure similar to GitHub. Many of these services are available at no cost, and paid Pro and Enterprise tiers are also offered.

How does Hugging Face make money?
Hugging Face focuses on building a collaborative platform for the machine learning community, which it calls the Hub. Its monetization strategy centers on offering convenient access to AI compute through features such as AutoTrain, Spaces, and Inference Endpoints, all accessible from the Hub interface. These services are billed by Hugging Face directly to the credit card associated with the account.

What is Kaggle used for?
Kaggle is a comprehensive platform for data science and artificial intelligence work. It hosts competitions with substantial prizes sponsored by prominent companies and organizations. Alongside these competitions, users can share their own datasets and explore datasets contributed by other users.
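Because each model, dataset, or Space on the Hub is a Git-based repository, individual files can be addressed by URL much like files on GitHub. A minimal sketch, assuming the `https://huggingface.co/{repo_id}/resolve/{revision}/{filename}` download pattern (with a `datasets/` or `spaces/` prefix for non-model repositories); `hub_file_url` is a hypothetical helper, not part of any Hugging Face library:

```python
from urllib.parse import quote

HUB_ENDPOINT = "https://huggingface.co"

# Assumption: model repos live at the root; dataset and Space repos carry a type prefix.
_REPO_TYPE_PREFIX = {"model": "", "dataset": "datasets/", "space": "spaces/"}


def hub_file_url(repo_id: str, filename: str, repo_type: str = "model", revision: str = "main") -> str:
    """Build the download URL for one file in a Hub repository (hypothetical helper)."""
    if repo_type not in _REPO_TYPE_PREFIX:
        raise ValueError(f"unknown repo_type: {repo_type!r}")
    prefix = _REPO_TYPE_PREFIX[repo_type]
    # Revisions such as "refs/pr/1" contain slashes, so encode them fully;
    # filenames keep "/" so files in subfolders resolve as paths.
    return f"{HUB_ENDPOINT}/{prefix}{repo_id}/resolve/{quote(revision, safe='')}/{quote(filename)}"


if __name__ == "__main__":
    print(hub_file_url("bert-base-uncased", "config.json"))
```

In practice, the official `huggingface_hub` library provides `hf_hub_url` and `hf_hub_download`, which build these URLs and also handle caching and authentication, so a hand-rolled helper like this is mainly useful for understanding how the Hub is organized.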

Computer Vision in Production: An Ultimate Guide!

Computer vision is a rapidly growing field of artificial intelligence that has the potential to revolutionize the manufacturing industry. By using cameras and sensors to capture and analyze visual data, computer vision can enable greater automation, improve quality control, and enhance safety on the production floor. In this article, we explore how computer vision is used in production and manufacturing, and some of the challenges that must be addressed to fully realize its potential.

What is Computer Vision in Production?

Computer vision is a subfield of artificial intelligence that focuses on enabling machines to interpret and understand visual data from the world around them, including images, videos, and other types of visual information. Using algorithms and machine learning techniques, computer vision systems analyze this data to identify patterns, objects, and other features of interest.

How Does It Help in Production?

Computer vision has a wide range of potential applications in the manufacturing industry. Here are some of the key ways it can improve production processes:

1. Quality Control: Computer vision systems can automatically inspect products for defects, ensuring that only high-quality items are shipped to customers.
2. Process Optimization: By analyzing visual data from production lines, computer vision systems can identify where processes can be optimized to improve efficiency and reduce waste.
3. Predictive Maintenance: Computer vision systems can monitor equipment and identify potential issues before they cause downtime or other problems.
4. Safety: Computer vision systems can monitor workers and identify potential safety hazards, helping to prevent accidents and injuries.

The Future of Computer Vision in Production

As computer vision technology continues to evolve, its potential applications in the manufacturing industry will continue to grow.
Here are some of the key trends likely to shape the future of computer vision in production:

1. Increased Automation: As computer vision systems become more advanced, they will be able to take on increasingly complex tasks, enabling greater levels of automation in manufacturing.
2. Real-Time Analytics: By analyzing visual data in real time, computer vision systems will give manufacturers instant insight into their production processes, enabling faster and better-informed decisions.
3. Integration with Other Technologies: Computer vision systems will likely be integrated with technologies such as robotics, IoT sensors, and AI-powered analytics tools to create more comprehensive and effective production systems.

Computer Vision in Production: Some Challenges

While computer vision has the potential to transform the manufacturing industry, several challenges must be addressed before that potential is fully realized:

1. Data Quality: Computer vision systems rely on high-quality data to function effectively. Manufacturers must ensure that their cameras and sensors are properly calibrated and that the data they collect is accurate and reliable.
2. Cost: Implementing computer vision systems can be expensive, particularly for smaller manufacturers, and adoption may require a significant upfront investment.
3. Privacy and Security: Because computer vision systems collect and analyze large amounts of visual data, they raise privacy and security concerns. Manufacturers must put appropriate measures in place to protect sensitive data and prevent unauthorized access.
4. Integration with Existing Systems: Integrating computer vision systems with existing production systems can be challenging, particularly if those systems are outdated or not designed to work with this technology.

How Does Computer Vision Technology Compare to Traditional Manufacturing?

In certain applications, computer vision can be more cost-effective and more effective than traditional, manual approaches. Although implementing computer vision systems may require an upfront investment, these systems can reduce costs and improve efficiency in the long run. For example, they can automate quality control, reducing the need for manual inspections and potentially reducing the number of defective products. This saves time and money while also improving product quality.

In terms of effectiveness, computer vision systems can be more accurate and consistent than human workers in certain applications. For example, they can inspect products for defects much faster than human inspectors and, in many cases, with higher accuracy. Computer vision systems can also monitor production processes in real time, enabling manufacturers to identify and address issues as they arise, which improves overall efficiency and reduces waste.

Final Thoughts!

Computer vision has the potential to transform the manufacturing industry by enabling greater levels of automation, improving quality control, and enhancing safety. While challenges remain before its full potential is realized, the benefits of this technology are clear. As computer vision systems continue to evolve, we can expect even more innovative applications in the years to come. By staying up to date with the latest developments in computer vision and working to overcome its challenges, manufacturers can position themselves for future success.
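To make the automated quality-control idea concrete, here is a deliberately simple sketch: compare each captured image with a "golden" image of a defect-free part and reject the part if too many pixels differ. It assumes a fixed camera, aligned grayscale images stored as nested lists of 0-255 values, and hypothetical function names and thresholds. Production systems typically use trained models and must cope with lighting changes and misalignment, which this pixel-difference check does not.

```python
from typing import Sequence

Image = Sequence[Sequence[int]]  # grayscale pixels (0-255), row by row


def defect_ratio(image: Image, reference: Image, tolerance: int = 30) -> float:
    """Fraction of pixels whose brightness differs from the reference by more than `tolerance`."""
    if len(image) != len(reference) or any(len(a) != len(b) for a, b in zip(image, reference)):
        raise ValueError("image and reference must have the same dimensions")
    total = 0
    differing = 0
    for row, ref_row in zip(image, reference):
        for pixel, ref_pixel in zip(row, ref_row):
            total += 1
            if abs(pixel - ref_pixel) > tolerance:
                differing += 1
    return differing / total if total else 0.0


def passes_inspection(image: Image, reference: Image, tolerance: int = 30, max_ratio: float = 0.01) -> bool:
    """Accept the part if no more than `max_ratio` of its pixels deviate from the golden sample."""
    return defect_ratio(image, reference, tolerance) <= max_ratio


if __name__ == "__main__":
    golden = [[100] * 4 for _ in range(4)]
    scratched = [row[:] for row in golden]
    scratched[2][1] = 255  # one bright pixel, standing in for a scratch
    print(passes_inspection(golden, golden))     # True
    print(passes_inspection(scratched, golden))  # False: 1 of 16 pixels differs
```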

Kaggle vs DataCamp for Data Science Learning: A Comprehensive Analysis

Welcome to our comprehensive analysis of Kaggle and DataCamp for data science learning. In this guide, we provide an in-depth comparison of these two popular online learning platforms, exploring their strengths, weaknesses, and suitability for different types of learners. Whether you're an aspiring data scientist or a seasoned practitioner looking to expand your skills, this guide will help you make an informed decision about which platform to choose. So let's dive in and explore the world of data science education with Kaggle vs DataCamp!

Kaggle: Unleashing the Power of Competitions and Collaboration

Core Features:

Advantages of using Kaggle for learning data science:

DataCamp: Interactive Learning and Skill Building

Overview of DataCamp's Key Features:

Interactive Coding Exercises: DataCamp provides interactive coding exercises that let learners practice their skills and get immediate feedback. The exercises cover a wide range of topics and technologies, from data manipulation and visualization to machine learning and deep learning.

Advantages of using DataCamp for learning data science:

Which Platform Should You Choose?

When deciding between Kaggle and DataCamp, there are several factors to consider:

To get a comprehensive learning experience from both platforms, consider using Kaggle for real-world problem-solving and exposure to diverse datasets, and DataCamp for structured learning paths and practical coding-skills development. You can also use Kaggle to apply the skills you learn on DataCamp to real-world problems and showcase your projects.

Conclusion

Both Kaggle and DataCamp are powerful platforms for online data science education. Kaggle's strengths lie in its real-world problem-solving approach, exposure to diverse datasets, and collaborative learning environment. DataCamp's strengths lie in its structured learning paths, practical coding-skills development, and focus on specific skills and technologies.

We encourage learners to explore both platforms based on their individual needs and goals. Depending on your learning preferences, prior knowledge and experience, desired level of engagement, and budget, one platform may be a better fit than the other. However, using both can provide a comprehensive learning experience and help you develop a well-rounded skill set.

FAQs

Is Kaggle the best way to learn data science?
Kaggle offers valuable resources and a thriving community for data scientists at every proficiency level. Whether you are just starting out and eager to learn by working on projects, an experienced data scientist seeking competitive challenges, or somewhere in between, Kaggle is a worthwhile platform to explore.

Does Google hire from Kaggle?
Strong Kaggle results can open doors at major technology companies. For example, Gilberto Titericz, who reached the top-ranking position on Kaggle in 2015, secured a position at Airbnb and also received job offers from companies such as Tesla and Google.

Is DataCamp worth it for data science?
If you aim to enhance your skills, learn programming languages, or deepen your understanding of data science and data engineering, DataCamp courses hold considerable value. However, if your primary goal is to launch a career or find job opportunities, note that DataCamp does not offer formal, accredited credentials to validate your learning.

Apigee vs AWS API Gateway: A Comprehensive Comparison

Apigee API Management and Amazon API Gateway are two of the most popular API management solutions available today. Both provide essential features for publishing APIs, but their approaches differ significantly. In this document, we compare Apigee and Amazon API Gateway in detail, covering functionality, flexibility, ease of use, and performance. By the end, you will better understand the differences between the two services and be able to decide which one best suits your use case.

Apigee: Features, Benefits, and Use Cases

Overview of the Apigee API management platform: Apigee API Management is a cloud-based platform that provides end-to-end API management capabilities. It allows developers to create, publish, secure, and analyze APIs at scale. Its features include API creation and design tools, analytics and monitoring, security and authentication options, and a developer portal with documentation support. Apigee API Management is highly scalable and can handle millions of API requests per day.

Key features and functionalities: The API creation and design tools let developers create and manage APIs using a range of protocols and formats. The analytics and monitoring features provide real-time insight into API performance and usage. Security and authentication options include OAuth 2.0 support, Google Cloud Armor, and customer-managed encryption keys (CMEK). The developer portal provides a solid starting point for publishing API documentation, hosting community forums, and offering other resources to developers.

Benefits of using Apigee: Apigee API Management lets organizations create, publish, secure, and analyze APIs at scale.
Its design, analytics, security, and developer-portal tooling makes APIs easier to manage, and the platform is highly scalable, handling millions of API requests per day. Apigee also offers flexible pricing plans to meet the needs of organizations of all sizes.

Use cases showcasing successful implementations: Apigee API Management has been implemented by a range of organizations, including large enterprises with demanding scalability and performance requirements. For example, a large financial services company used Apigee to create a unified API platform that let it securely expose its services to external partners and developers. In another case, a large retailer used Apigee to manage its APIs and provide a seamless customer experience across multiple channels. Apigee is also well suited to organizations with strict scalability and performance requirements, as it can handle millions of API requests per day and offers flexible deployment options.

AWS API Gateway: Features, Benefits, and Use Cases

Overview of the AWS API Gateway service: AWS API Gateway is a fully managed service that makes it easy for developers to create, deploy, and manage APIs at any scale. Developers can build RESTful APIs, or WebSocket APIs that support real-time, two-way communication between applications. AWS API Gateway also provides API deployment and management, integration with AWS services, security and authorization mechanisms, and caching and performance optimization.

Key features and functionalities: The API deployment and management features let developers create and manage APIs using a range of protocols and formats. Integration with AWS services lets developers connect their APIs to services such as AWS Lambda, Amazon S3, and Amazon DynamoDB. Security and authorization mechanisms include support for OAuth 2.0, AWS Identity and Access Management (IAM), and Amazon Cognito. Caching improves API performance by storing responses and reducing the number of requests that reach backend services.

Benefits of leveraging AWS API Gateway: AWS API Gateway lets organizations create, deploy, and manage APIs at any scale using its deployment, integration, security, and caching features. It is highly scalable and can handle millions of API requests per day, and its pay-as-you-go pricing scales with usage, which suits organizations of all sizes.

Use cases illustrating effective utilization: AWS API Gateway has been used effectively by a range of organizations, including those with serverless architectures and those that integrate with AWS Lambda functions. For example, a large media company used AWS API Gateway to build a serverless architecture that let it easily manage its APIs and scale its infrastructure as needed. In another case, a large e-commerce company used AWS API Gateway to integrate its APIs with AWS Lambda functions, simplifying API management and reducing the number of requests to backend services.
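The e-commerce use case above pairs API Gateway with AWS Lambda. A minimal sketch of such a function, assuming a REST API configured with Lambda proxy integration: API Gateway passes the HTTP request to the function as an event dictionary and expects a dictionary with `statusCode`, `headers`, and a string `body` in return. The `productId` parameter and the stock lookup are hypothetical placeholders.

```python
import json


def lambda_handler(event, context):
    """Handle a request forwarded by API Gateway via Lambda proxy integration."""
    # With proxy integration, query-string parameters arrive in the event;
    # the value is None when the request has none.
    params = event.get("queryStringParameters") or {}
    product_id = params.get("productId")  # hypothetical parameter name
    if product_id is None:
        status, payload = 400, {"error": "productId is required"}
    else:
        # Hypothetical lookup; a real function might query DynamoDB here.
        status, payload = 200, {"productId": product_id, "inStock": True}
    # API Gateway expects this response shape from a proxy integration.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }
```

API Gateway handles routing, authorization, and throttling before the function runs, and if response caching is enabled on the API stage, repeated requests can be answered from the cache without invoking the function at all, which is how caching reduces the load on backend services.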
AWS API Gateway is also well suited to organizations that require high scalability and performance, as it can handle millions of API requests per day and offers flexible deployment options.

Comparison: Apigee vs. AWS API Gateway

A. Pricing models and cost considerations: One crucial aspect to assess when comparing Apigee and AWS API Gateway is their pricing models and associated costs. Apigee offers tiered pricing plans based on the scale of API usage and the specific features required, allowing organizations to choose a plan that aligns with their budget and growth expectations. AWS API Gateway, by contrast, follows a pay-as-you-go model, charging based on the number of API calls and the amount of data transferred. Estimating your projected usage and understanding the potential cost implications of each platform is essential to an informed decision.

B. Ease of use and learning curve:

Object Recognition Software Open Source: A Comprehensive Guide

Object recognition software is technology that enables machines to identify and classify objects within digital images or videos. It has numerous applications, including surveillance, robotics, autonomous vehicles, and augmented reality. While many commercial object recognition solutions are available, open source software has become increasingly popular due to its flexibility, affordability, and community support. In this article, we explore the world of open source object recognition software, its benefits, and some of the most popular options available.

What is Open Source Object Recognition Software?

Open source software is software that is freely available to use, modify, and distribute. Its source code is made available to the public, allowing developers to modify and improve it as needed. Open source software is often developed by a community of volunteers who collaborate to create and maintain it. This community-driven approach has produced many high-quality software solutions that are available for free.

Benefits of Open Source Object Recognition Software

There are several benefits to using open source object recognition software, including:

1. Cost: Open source software is often available for free, making it an affordable option for individuals and organizations with limited budgets.
2. Flexibility: Open source software can be customized and modified to meet specific needs, making it suitable for a wide range of applications.
3. Community Support: Open source software is often developed and maintained by a community of volunteers who provide support and assistance to users.
4. Transparency: The source code is publicly available, so users can inspect it to verify the software's security and functionality.

Popular Open Source Object Recognition Software

1. OpenCV: A widely used open source computer vision library with algorithms for object recognition, image processing, and machine learning. It is written in C++ and has bindings for Python and Java, among other languages.
2. TensorFlow: An open source machine learning library developed by Google. It includes a wide range of tools and algorithms for machine learning, including object recognition.
3. YOLO: YOLO (You Only Look Once) is a fast and accurate open source family of real-time object detection models. The original YOLO was implemented in the Darknet framework (C and CUDA); many later versions are implemented in Python.
4. Darknet: An open source neural network framework written in C and CUDA, best known as the framework in which YOLO was first implemented. It has Python bindings.
5. Caffe: An open source deep learning framework with tools for object recognition, image processing, and machine learning. It is written in C++ and has Python and MATLAB interfaces.
6. Torch: An open source machine learning library with a C/CUDA core that is scripted in Lua. It is no longer actively developed; its Python successor is PyTorch.
7. MXNet: An open source deep learning framework with tools for object recognition, image processing, and machine learning. It is written in C++ and has bindings for Python, Scala, R, Julia, and other languages.

Choosing the Right Open Source Object Recognition Software

When choosing open source object recognition software, it is important to consider several factors, including:

1. Compatibility: Make sure the software supports your hardware (for example, your GPU) and operating system.
2. Ease of Use: Choose software that is easy to install, configure, and use.
3. Performance: Look for software that is fast and accurate enough for your use case and can handle large datasets.
4. Community Support: Choose software with an active community of developers and users who can provide help.
5. Documentation: Look for clear and comprehensive documentation, including tutorials and examples.

Conclusion

Open source object recognition software has become an increasingly popular option for individuals and organizations looking for affordable, flexible, and community-driven solutions. High-quality options include OpenCV, TensorFlow, YOLO, Darknet, Caffe, Torch, and MXNet. When choosing among them, weigh compatibility, ease of use, performance, community support, and documentation. With the right tool, you can unlock the potential of computer vision and take your applications to the next level.
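
To make object detection a little more concrete, here is a minimal sketch of one post-processing step that detectors such as YOLO rely on: non-maximum suppression (NMS), which keeps the highest-scoring box among heavily overlapping candidates. It is plain Python for illustration only; the `(x1, y1, x2, y2)` box format, the function names, and the 0.5 overlap threshold are assumptions. In practice you would use a library routine such as `torchvision.ops.nms` or OpenCV's `cv2.dnn.NMSBoxes`.

```python
from typing import List, Sequence, Tuple

# Assumed box format: (x1, y1, x2, y2) with x2 > x1 and y2 > y1, in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(
    boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5
) -> List[int]:
    """Greedy NMS: return indices of the boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any box already kept.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    detections = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
    confidences = [0.9, 0.8, 0.7]
    print(non_max_suppression(detections, confidences))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.82, so it is treated as a duplicate detection of the same object and dropped, while the distant third box survives.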

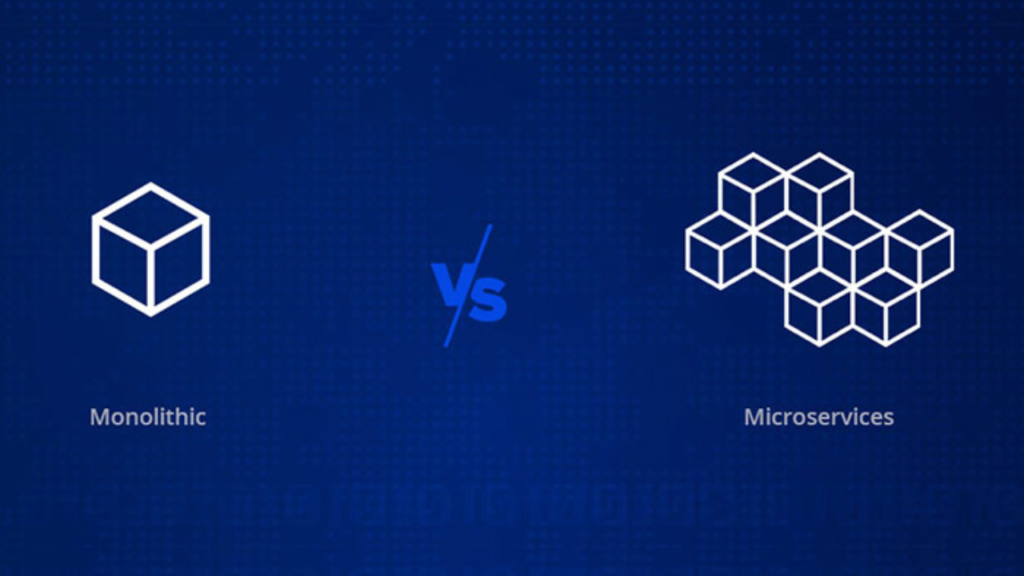

Monolithic vs Microservices Architecture: Choosing the Right Path for Your Software

In the world of software development, architectural decisions play a crucial role in shaping an application’s performance, scalability, and maintainability, and the right choice can make the difference between a successful application and a failed one. Two of the most popular architectural paradigms are monolithic and microservices architecture. In this article, we will explore the characteristics, advantages, and disadvantages of each and provide guidance on how to choose the right path for your software.

What is Monolithic Architecture?

Monolithic architecture is a traditional approach to software development in which the entire application is built and deployed as a single, unified unit. Its components are tightly coupled and interdependent, so a failure in one component can affect the entire application. Monolithic applications are usually built with a single programming language and a single shared database.

Advantages of Monolithic Architecture

One of the main advantages of monolithic architecture is its simplicity. Because the entire application is a single unit, it is easier to develop and deploy. Testing and debugging are also easier, since all the components live in one codebase and run in one process. Additionally, a monolith has lower operational overhead, because there is only one application to manage.

Disadvantages of Monolithic Architecture

One of the main disadvantages of monolithic architecture is its limited scalability: because the components are tightly coupled, it is difficult to scale one of them independently, so the whole application must be scaled together. Dependency issues can also arise, since a change to one component may affect others. This tight coupling increases the risk of downtime, because a failure in one component can bring down the entire application.

What is Microservices Architecture?

Microservices architecture is a modern approach to software development in which the application is structured as a collection of independent services. Each service is responsible for a specific function and communicates with other services through APIs. Because the services are independent, each one can use a different programming language or database where that makes sense.

Advantages of Microservices Architecture

One of the main advantages of microservices architecture is its scalability. Each service can be scaled independently, so a heavily used service can be given more resources without scaling the rest of the application. Microservices also provide flexibility, because each service can be developed and deployed independently, which allows faster development and release cycles. Additionally, microservices can improve resilience: a failure in one service need not bring down the entire application, provided the other services handle it gracefully.

Disadvantages of Microservices Architecture

One of the main disadvantages of microservices architecture is its increased complexity. Because the application is composed of many services, it is harder to manage, monitor, and maintain. Integration and testing can also be challenging, since each service must be tested on its own and then together with the services it depends on. Additionally, microservices can raise development and operational costs, because each service needs its own build, deployment, and infrastructure.

Real-World Examples of Companies Using Monolithic and Microservices Architectures

Several well-known companies illustrate both approaches:

1. Netflix: Netflix started as a DVD rental service running a monolithic application. As it grew into a streaming service with millions of subscribers worldwide, it migrated to a cloud-based microservices architecture, and it is now one of the most frequently cited examples of microservices at scale.
2. Amazon: Amazon’s e-commerce platform is composed of many services, each responsible for a specific function, such as product search, checkout, and payment processing. This architecture gives Amazon the scalability and flexibility to handle a high volume of requests and provide a seamless shopping experience for its customers.
3. Other Examples: Uber, Airbnb, and Spotify have also adopted microservices to provide scalable and flexible services to their customers.

These examples show that both architectures can succeed, and Netflix’s history shows that the choice is not permanent: an application can start as a monolith and move to microservices as it grows. The right choice depends on several factors, including the size and complexity of the application, its scalability requirements, and the development team’s expertise.

Monolithic Vs Microservices Architecture – Choosing the Right Architecture

When choosing between monolithic and microservices architecture, there are several factors to consider. The first is the size and complexity of the application: a monolith may be the better choice for a smaller application with limited functionality, while microservices may suit a larger, more complex one. The second is scalability: if parts of the application must handle a high volume of requests independently of the rest, microservices may be the better choice. Finally, consider the development team’s expertise and experience; if the team is more familiar with monolithic architecture, a monolith may be the better choice.

Conclusion

Choosing the right architecture is a critical decision in software development. Monolithic and microservices architectures are two popular paradigms, each with its own advantages and disadvantages. When choosing between them, consider the size and complexity of the application, its scalability requirements, and the development team’s expertise. Making an informed decision helps set your software application up for success.
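
As a rough illustration of the difference between the two architectures, the following Python sketch (standard library only) implements the same hypothetical inventory check twice: once as an in-process function call, as it would be inside a monolith, and once as a tiny HTTP service that an order component calls over the network, as in a microservices design. The item names, the `/stock/<item>` endpoint, and the `retry-later` fallback are illustrative assumptions, not a recommended production design.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Tuple

# Hypothetical stock levels owned by the inventory component.
STOCK = {"apple": 3, "pear": 0}


def check_stock(item: str) -> int:
    """Inventory logic shared by both versions."""
    return STOCK.get(item, 0)


def place_order_monolith(item: str) -> str:
    # Monolith: a plain in-process function call, deployed together with the caller.
    return "accepted" if check_stock(item) > 0 else "rejected"


class InventoryHandler(BaseHTTPRequestHandler):
    """The same inventory logic exposed as a separate service: GET /stock/<item>."""

    def do_GET(self) -> None:
        item = self.path.rsplit("/", 1)[-1]
        body = json.dumps({"item": item, "stock": check_stock(item)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:  # silence per-request logging
        pass


def start_inventory_service() -> Tuple[ThreadingHTTPServer, str]:
    server = ThreadingHTTPServer(("127.0.0.1", 0), InventoryHandler)  # port 0: OS picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address[:2]
    return server, f"http://{host}:{port}"


# Call the service directly, ignoring any HTTP proxy configured in the environment.
_direct = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def place_order_microservice(item: str, inventory_url: str) -> str:
    # Microservices: a network call that can fail, so timeouts and outages must be handled.
    try:
        with _direct.open(f"{inventory_url}/stock/{item}", timeout=2) as resp:
            stock = json.load(resp)["stock"]
    except OSError:  # connection refused, timeout, HTTP error, ...
        return "retry-later"  # degrade gracefully instead of crashing the order service
    return "accepted" if stock > 0 else "rejected"


if __name__ == "__main__":
    server, url = start_inventory_service()
    print(place_order_monolith("apple"))           # accepted
    print(place_order_microservice("apple", url))  # accepted
    print(place_order_microservice("pear", url))   # rejected
    server.shutdown()
    server.server_close()
    print(place_order_microservice("apple", url))  # retry-later (service is down)
```

The monolithic call cannot fail for network reasons, but it also cannot be scaled or deployed on its own. The service version can be, at the cost of handling timeouts and outages explicitly, which is the complexity trade-off described above.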

Applications of Computer Vision in Agriculture

Farming has changed a lot, and technology is now helping crops grow better than ever before. In this blog, we’ll explore the ways computer vision, the field of teaching computers to interpret images and video, is transforming agriculture. Instead of relying on guesswork, farmers can use computer vision to see and understand their fields like never before. From spotting pests to measuring plant health, let’s dive into how this technology is turning farms into high-tech hubs!

Some Applications of Computer Vision in Agriculture

Many of these applications rely on shape analysis, which measures the shape of agricultural products from images:

1. Investigating Shape Trait Heritability for Cultivar Descriptions: Shape analysis can be used to study the genetic basis of shape traits in agricultural products. This information can help breeders develop new cultivars with desirable shape characteristics.
2. Plant Variety or Cultivar Patents: Shape analysis can be used to establish the uniqueness of a new plant variety or cultivar, which can support a patent application for it.
3. Evaluation of Consumer Decision Performance: Shape analysis can be used to study how consumers perceive the shape of agricultural products and how this perception affects their purchasing decisions. The results can inform marketing strategies that take consumers’ shape preferences into account.
4. Product Sorting: Shape analysis can be used to sort agricultural products by their shape characteristics. Machine vision and pattern recognition techniques offer many advantages over conventional optical or mechanical sorting devices.
5. Clone Selection: Shape analysis can be used to select clones with desirable shape characteristics, which can then be used to develop new cultivars.

In addition to these applications, shape analysis can also be used for product characterization, taxonomic purposes, and cultivar or strain origin assessment.
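
The product-sorting idea in item 4 can be sketched in a few lines. The example below is a minimal illustration under stated assumptions, not a production sorter: it assumes each product has already been segmented into a binary mask (1 = product pixel), computes its area and an elongation score from the second-order moments of the pixel coordinates, and assigns a grade using a hypothetical threshold of 1.5. Real systems would obtain masks from camera images and calibrate thresholds for each crop.

```python
import math
from typing import Dict, Sequence


def shape_descriptors(mask: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Area and moment-based elongation of the object in a binary mask."""
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not points:
        raise ValueError("mask contains no object pixels")
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Second-order central moments (covariance of the pixel coordinates).
    sxx = sum((x - mean_x) ** 2 for x, _ in points) / n
    syy = sum((y - mean_y) ** 2 for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points) / n
    # Closed-form eigenvalues of the 2x2 covariance matrix: variance along the principal axes.
    half_trace = (sxx + syy) / 2
    spread = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    major, minor = half_trace + spread, half_trace - spread
    elongation = math.sqrt(major / minor) if minor > 0 else math.inf
    return {"area": float(n), "elongation": elongation}


def grade(mask: Sequence[Sequence[int]], max_elongation: float = 1.5) -> str:
    """Hypothetical sorting rule: near-round products pass, elongated ones are diverted."""
    return "round" if shape_descriptors(mask)["elongation"] <= max_elongation else "elongated"


if __name__ == "__main__":
    square = [[1] * 4 for _ in range(4)]  # compact 4x4 object
    bar = [[1] * 8 for _ in range(2)]     # long, thin 2x8 object
    print(grade(square), grade(bar))      # round elongated
```

Because elongation is computed from the spread of pixels along the object’s principal axes, it is largely independent of how the product is rotated in the image, which matters when products arrive on a conveyor in random orientations.
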

How does computer vision technology aid in the shape analysis of agricultural products?

Computer vision technology plays a crucial role in the shape analysis of agricultural products. Here are some of the ways it helps:

1. Image Processing Algorithms: Image processing algorithms have been developed to objectively measure the external features of agricultural products by extracting shape information from their images.
2. Automated Shape Processing System: Researchers have proposed an automated shape-processing system, useful for both scientific and industrial purposes, that could form the basis of a common language for the scientific community. It uses machine vision and pattern recognition techniques to sort agricultural products by their shape characteristics.
3. Multivariate Statistics: Multivariate statistical methods can be used to analyze the shape data obtained from agricultural products, helping to identify patterns and relationships between different shape characteristics.
4. Operative MATLAB Code: Ready-to-use MATLAB code for shape analysis is available. It can extract shape information from images of agricultural products and perform statistical analysis on the resulting shape data.
5. Non-Destructive Testing: Computer vision enables non-destructive testing: the shape of a product can be analyzed without damaging it, which is particularly useful for products that are delicate or have a short shelf life.

In short, computer vision technology aids the shape analysis of agricultural products through image processing algorithms, an automated shape-processing system, multivariate statistics, operative MATLAB code, and non-destructive testing.
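
Item 3 mentions multivariate statistics. One of the simplest multivariate summaries is a correlation matrix, which shows how strongly pairs of shape measurements move together across a batch of products; methods such as principal component analysis build on the same covariance structure. Below is a small standard-library sketch; the descriptor names and the measurements in the example are made up for illustration and do not come from any study.

```python
import math
from typing import Dict, List, Sequence, Tuple


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


def correlation_matrix(table: Dict[str, List[float]]) -> Dict[Tuple[str, str], float]:
    """Correlation for every ordered pair of shape descriptors."""
    names = list(table)
    return {(a, b): pearson(table[a], table[b]) for a in names for b in names}


if __name__ == "__main__":
    # Hypothetical measurements for five fruits (not real data).
    descriptors = {
        "length_mm": [52.0, 61.0, 58.0, 70.0, 66.0],
        "width_mm": [40.0, 43.0, 42.0, 47.0, 45.0],
        "elongation": [1.30, 1.42, 1.38, 1.49, 1.47],
    }
    for (a, b), r in correlation_matrix(descriptors).items():
        if a < b:  # print each unordered pair once
            print(f"{a} vs {b}: r = {r:+.2f}")
```

A strong correlation between two descriptors suggests they carry overlapping information, which is one reason shape studies often reduce many descriptors to a few combined components before comparing cultivars.
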

Are there any Limitations or Challenges?

While shape analysis has many potential applications in the agricultural industry, there are also several limitations and challenges to implementing this technology. Here are five of them:

1. Variability in Shape: Agricultural products can vary widely in shape, even within the same cultivar. This variability makes it difficult to develop algorithms that measure shape characteristics accurately.
2. Image Acquisition: Obtaining high-quality images of agricultural products can be challenging. Lighting, camera angle, and background all affect image quality, and poor images lead to errors in shape analysis.
3. Processing Time: Shape analysis can be computationally intensive, particularly on large datasets. Long processing times can be a barrier to adopting this technology in the agricultural industry.
4. Cost: Implementing shape analysis technology can be expensive, particularly for small-scale farmers. The cost of equipment, software, and training can be a barrier to adoption.
5. Lack of Standardization: Shape analysis methods for agricultural products are not yet standardized. This makes it difficult to compare results between studies and limits the usefulness of the technology.

Final Thoughts!

In summary, the main limitations and challenges to implementing shape analysis in the agricultural industry are variability in shape, image acquisition, processing time, cost, and lack of standardization. Addressing these challenges will be important for the widespread adoption of shape analysis technology in agriculture.

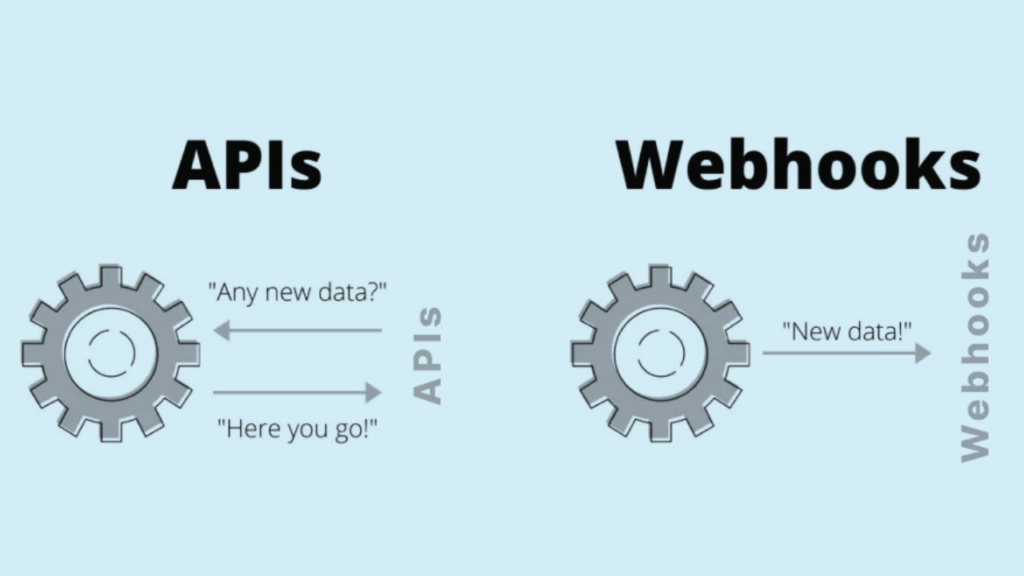

Webhooks vs APIs: A Comparative Exploration in 2023

In the ever-evolving world of technology, staying informed about the available tools and techniques is essential for developers and businesses alike. Two fundamental elements of modern software development are APIs (Application Programming Interfaces) and webhooks. They are the silent heroes behind data exchange, powering countless applications and services. In this article, we’ll delve into webhooks vs APIs, comparing them, exploring their similarities, and discussing when and why you might prefer one over the other. Let’s embark on this journey of discovery.

What Are APIs?

APIs, or Application Programming Interfaces, serve as intermediaries between software systems. They define the rules and protocols for how those systems interact, allowing developers to access the functionality and data of other applications. This makes them a cornerstone of modern software development.

Examples of APIs (Application Programming Interfaces):

1. Twitter API (social media; now the X API): Developers can use it to integrate tweets, user profiles, and engagement metrics into their applications, enabling features such as embedding tweets, scheduling posts, or analyzing trends.
2. Facebook Graph API (social media): Lets developers access and interact with Facebook’s social graph, enabling features such as sharing content, fetching user data, and posting updates.
3. PayPal API (payment gateway): PayPal provides APIs for processing payments, managing transactions, and handling refunds. E-commerce platforms and businesses use them to accept online payments securely.
4. Stripe API (payment gateway): Popular for its ease of integration, Stripe’s API lets businesses accept payments, set up subscription services, and manage customer information.
5. Google Maps API (maps and location): Developers can embed interactive maps into websites or mobile apps, with features such as geolocation, route planning, and place search.

What Are Webhooks?

Webhooks offer a different mechanism for data exchange. When a specific event occurs, the application sends an HTTP request (usually a POST) with a data payload to a URL registered in advance, instead of waiting to be asked. In essence, webhooks are a way for applications to notify each other about events in real time.

Examples of Webhooks:

1. Chat Applications (Slack): Slack’s Incoming Webhooks let other applications post messages into a channel, and its Events API pushes HTTP requests to your app when events such as new messages or mentions occur. This enables real-time integration with other applications and services.
2. E-commerce Platforms (Shopify): Shopify offers webhooks that notify store owners’ apps about events such as order creation, product updates, or inventory changes. This enables efficient inventory management and order fulfillment.
3. Content Management Systems (WordPress): Through plugins, WordPress sites can send or receive webhooks, for example to trigger actions such as publishing new posts or updating content in response to specific events.
4. IoT Devices: Some smart home devices and their cloud services use webhooks to notify other systems. For example, a smart doorbell may trigger a webhook that notifies the homeowner when someone is at the door.
5. Version Control Systems (GitHub): Developers use GitHub webhooks to receive real-time notifications about events such as pushes, pull requests, or issues. This streamlines collaboration and automation in software development.

Webhooks Vs APIs – Some Similarities

1. Data Exchange: Both webhooks and APIs facilitate data exchange. APIs offer structured endpoints for fetching or manipulating data, while webhooks push data to specified endpoints when events happen.
2. Automation: Both enable automation. APIs let you retrieve or update information programmatically, while webhooks automate the notification of events, reducing the need for constant polling.
3. Scalability: Both can grow with your application; as it grows, you can call more API endpoints or subscribe to more webhook events as needed.

When To Use Webhooks?

Webhooks shine in scenarios where real-time updates are crucial. For example:

- Chat Integrations: Bots and external services can be notified of new messages as soon as they are posted.
- E-commerce: Order status and stock availability updates can be pushed immediately to keep customers informed.
- IoT (Internet of Things): Webhooks enable immediate responses to sensor-triggered events.

When To Use APIs?

APIs are ideal for scenarios that require controlled data access and richer interactions. Consider these situations:

- Social Media Integration: APIs give access to social media platforms for posting, fetching data, and engaging with users, within the permissions and rate limits each platform sets.
- Payment Processing: APIs handle complex transactions securely, enabling seamless online purchases.
- Data Analytics: APIs provide structured access to data for in-depth analysis.

Webhooks Vs APIs – What About The Future?

The future holds promise for both. Webhooks will likely continue to play a crucial role in real-time communication, especially in IoT, instant messaging, and event-driven applications. APIs are likely to keep becoming more efficient, secure, and user-friendly to meet the growing demand for data integration.

Final Thoughts

In the grand tapestry of software development, APIs and webhooks are threads of connectivity that bind applications, services, and data together. Choosing between them depends on your specific needs and use cases: use APIs when you need structured data access and interaction, and use webhooks for instant event notifications and real-time updates. Both are indispensable tools in the modern developer’s toolkit, ensuring the seamless flow of data in an interconnected world. So, as you embark on your next development journey, remember that APIs and webhooks are your allies, ready to bridge the gaps and create connections that bring your projects to life.
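
The practical difference shows up on the receiving end. With an API, your code decides when to call the provider; with a webhook, the provider calls your endpoint, so your code must check that each request is genuine before acting on it. Many providers sign webhook payloads with an HMAC; GitHub, for example, sends an `X-Hub-Signature-256` header of the form `sha256=<hex digest>`, computed over the raw request body with a shared secret. The sketch below verifies such a signature and dispatches on an event type. It is framework-agnostic and uses only the standard library; the secret, the JSON shape (`{"event": ...}`), and the event names are hypothetical, so check your provider’s documentation for its exact header and payload format.

```python
import hashlib
import hmac
import json
from typing import Callable, Dict, Tuple

# Hypothetical shared secret, configured both here and in the provider's webhook settings.
SECRET = b"replace-with-your-webhook-secret"


def sign(payload: bytes, secret: bytes) -> str:
    """Compute a GitHub-style signature header value: 'sha256=<hex HMAC>'."""
    return "sha256=" + hmac.new(secret, payload, hashlib.sha256).hexdigest()


def verify_signature(payload: bytes, signature_header: str, secret: bytes) -> bool:
    """Constant-time comparison, so response timing does not reveal partial matches."""
    expected = sign(payload, secret).encode()
    return hmac.compare_digest(expected, (signature_header or "").encode())


def on_order_created(event: dict) -> str:
    return f"fulfilling order {event['id']}"


# Hypothetical event names mapped to handler functions.
HANDLERS: Dict[str, Callable[[dict], str]] = {"order.created": on_order_created}


def handle_webhook(payload: bytes, signature_header: str, secret: bytes = SECRET) -> Tuple[int, str]:
    """Return an (HTTP status, message) pair for one incoming webhook request."""
    if not verify_signature(payload, signature_header, secret):
        return 401, "invalid signature"
    event = json.loads(payload)
    handler = HANDLERS.get(event.get("event"))
    if handler is None:
        return 202, "ignored"  # acknowledge events we do not handle instead of reporting a failure
    return 200, handler(event)


if __name__ == "__main__":
    body = json.dumps({"event": "order.created", "id": 42}).encode()
    print(handle_webhook(body, sign(body, SECRET)))    # (200, 'fulfilling order 42')
    print(handle_webhook(body, "sha256=" + "0" * 64))  # (401, 'invalid signature')
```

Getting the same information through an API instead would mean polling an endpoint on a timer and comparing results, which needs no public endpoint to secure but adds latency and wastes requests when nothing has changed.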

Diffusion Model Image Generation: A Comprehensive Survey

Generative AI has been a rapidly growing field in recent years, and text-to-image synthesis is one of its most exciting and promising areas of research. In this article, we review the latest developments in diffusion model image generation, which has emerged as a popular approach for a wide range of generative tasks.

What Is a Diffusion Model? How Does It Work for Image Synthesis?

Diffusion models (DMs) are a family of generative models that are Markov chains trained with variational inference. A DM learns to reverse a process that gradually perturbs data with noise (the diffusion process); generating a sample means starting from pure noise and running the learned reverse process. The denoising diffusion probabilistic model (DDPM), published in 2020, was a milestone that sparked rapidly growing interest in diffusion models across the generative modeling community. The PDF provides a self-contained introduction to DDPM, covering the most relevant work that preceded it and how unconditional DDPM works, with image synthesis as a concrete example. It also summarizes how guidance helps in conditional DMs, an important foundation for understanding text-conditional DMs for text-to-image generation.

What Is Diffusion Model Image Generation All About?

Diffusion model image generation relies on a model that has learned to undo the perturbation of data with noise: once trained, it turns random noise into an image step by step. According to [2], DMs are Markov chains trained with variational inference whose learning goal is to reverse this noising (diffusion) process. DDPM is a type of diffusion model, defined as a parameterized Markov chain, that generates images from noise in a finite number of transitions during inference.
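
The noising (forward) process that a DDPM learns to reverse has a convenient closed form. With a variance schedule beta_1, ..., beta_T and alpha_bar_t defined as the product of (1 - beta_s) for all s up to t, a noisy version of an image can be drawn in one step as `x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps`, where `eps` is standard Gaussian noise. The sketch below implements that step in plain Python on a toy "image" stored as a flat list of pixel values. The linear schedule from 1e-4 to 0.02 over T = 1000 steps follows the original DDPM setup; the function names are our own, and a real implementation would operate on tensors.

```python
import math
import random
from typing import List, Sequence, Tuple


def linear_beta_schedule(T: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Noise variances beta_1..beta_T, increasing linearly."""
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]


def cumulative_alphas(betas: Sequence[float]) -> List[float]:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s): the share of signal variance left at step t."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def q_sample(
    x0: Sequence[float], t: int, alpha_bars: Sequence[float], rng: random.Random
) -> Tuple[List[float], List[float]]:
    """Draw x_t ~ q(x_t | x_0) in one step; t is a 0-based index into the schedule.

    Returns (x_t, eps); DDPM trains its network to predict eps from (x_t, t).
    """
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return x_t, eps


if __name__ == "__main__":
    rng = random.Random(0)
    alpha_bars = cumulative_alphas(linear_beta_schedule())
    x0 = [0.8, -0.3, 0.5, 0.1]  # toy 4-pixel "image", values scaled to [-1, 1]
    for t in (0, 249, 999):
        x_t, _ = q_sample(x0, t, alpha_bars, rng)
        print(t, round(alpha_bars[t], 5), [round(v, 3) for v in x_t])
```

At early steps alpha_bar is close to 1 and the image is almost untouched; by the last step it is close to 0 and `x_t` is essentially pure noise. Generation runs this chain in reverse, using a trained network to estimate and remove the noise at each step.
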

Text-Conditioned Models Improve Learning in Image Generation

Text-conditioned models improve image generation by providing guidance to the generative model. In text-to-image generation, a generative model is conditioned on a text description and generates a corresponding image; the description guides the model toward more realistic and accurate results. Many recent text-to-image models are built on diffusion models (DMs), the family of generative models described above. Text-conditioned DMs use the text description as a condition that steers the reverse (denoising) process, which improves the quality of the generated images.

Existing Challenges and Promising Future Directions in Text-To-Image Generation

Existing Challenges

1. Limited Dataset Availability: One of the main challenges in text-to-image generation is the limited availability of large-scale datasets that pair text descriptions with corresponding images.
2. Ambiguity in Textual Descriptions: Textual descriptions can be ambiguous and subjective, which makes it difficult for generative models to interpret them accurately and generate matching images.
3. Complex Scene Understanding: Generating realistic images from textual descriptions requires a deep understanding of complex scenes, including object relationships, spatial layouts, and lighting conditions.

Promising Future Directions

1. Multi-Modal Learning: Training generative models on multiple modalities, such as text, images, and audio, is a promising way to improve their ability to generate realistic images from textual descriptions.
2. Attention Mechanisms: Attention mechanisms help generative models focus on the parts of the text description that are most relevant to generating the corresponding image, which can improve the accuracy and realism of the results.
3. Incorporating External Knowledge: Incorporating external knowledge, such as object recognition and scene understanding, can help generative models better interpret textual descriptions, leading to more accurate and realistic images.

Overall, while challenges remain, these directions offer exciting opportunities to improve the accuracy and realism of generated images.

Final Thoughts!

Text-to-image generation is a fascinating and challenging task in generative AI. Challenges such as limited dataset availability and ambiguous textual descriptions remain, but multi-modal learning, attention mechanisms, and external knowledge offer promising ways forward. As the field progresses, we may one day build generative models that produce visually realistic images from textual descriptions with human-like accuracy and creativity. The possibilities are vast, and I am excited to see what the future holds for text-to-image generation and generative AI as a whole.
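
Text conditioning is often combined with classifier-free guidance, one widely used way of applying the guidance mentioned earlier for conditional DMs. At each denoising step the network predicts the noise twice, once with the text prompt and once without it, and the two predictions are combined as `eps = eps_uncond + s * (eps_cond - eps_uncond)`, where the guidance scale `s` trades diversity for closer adherence to the prompt. The sketch below shows only that combination step; `toy_model` is a hypothetical stand-in for a trained noise-prediction network, and whether a particular text-to-image system uses this technique depends on the system.

```python
from typing import Callable, List, Optional, Sequence

# A noise predictor takes (x_t, t, prompt) and returns one noise estimate per value of x_t.
# prompt=None means "unconditional" (in practice, an empty or null text embedding).
NoisePredictor = Callable[[Sequence[float], int, Optional[str]], List[float]]


def guided_noise(
    model: NoisePredictor, x_t: Sequence[float], t: int, prompt: str, scale: float
) -> List[float]:
    """Classifier-free guidance: extrapolate from the unconditional toward the conditional prediction."""
    eps_uncond = model(x_t, t, None)
    eps_cond = model(x_t, t, prompt)
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def toy_model(x_t: Sequence[float], t: int, prompt: Optional[str]) -> List[float]:
    """Hypothetical stand-in for a trained network: the prompt simply shifts the prediction."""
    shift = 0.5 if prompt is not None else 0.0
    return [0.1 * x + shift for x in x_t]


if __name__ == "__main__":
    x_t = [1.0, -1.0]
    for scale in (0.0, 1.0, 7.5):
        print(scale, guided_noise(toy_model, x_t, t=500, prompt="a red apple", scale=scale))
```

With a scale of 0 the result is the unconditional prediction, with 1 it is the ordinary conditional prediction, and larger values push each denoising step further toward the prompt. Some papers write the same rule with a differently offset scale parameter, so check which convention a given model uses.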