Overfitting and underfitting are the twin hurdles that every Data Scientist, rookie or seasoned, grapples with. While overfitting tempts with its flawless performance on training data only to falter in real-world applications, underfitting reveals a model’s lackluster grasp of the data’s essence. Achieving the golden mean between these two states is where the art of model crafting truly lies.

This blog post discusses the intricacies that distinguish overfitting from underfitting. Let’s demystify these model conundrums and equipping ourselves to navigate them adeptly.

Overfitting in Machine Learning: An Illusion of Accuracy

Overfitting occurs when an ML model learns the training data too well. It happens when a machine learning model captures all its intricacies, noises, and even outliers. The result is an exceedingly complex model that performs exceptionally well on the training data. But there’s a huge downfall, such a model fails miserably when it encounters new, unseen data.

What is an example of an Overfitting Model?

Consider an example where you are training a model to predict house prices. It’s predicting prices based on features such as area, number of rooms, location, etc. An overfit model in this scenario would learn the training data to such an extent that it might start considering irrelevant or random features.

If it’s considering features like the house number and the color of the exterior, it’s of no use. Considering the importance and thinking that they may influence the price is not good.

Such a model may seem incredibly accurate with the training data. But when it’s used to predict the prices of new houses, its performance will likely be poor. This is because the irrelevant features it relied upon do not actually contribute to the price of a house.

Underfitting in Machine Learning: Oversimplification

Underfitting is almost the opposite of overfitting. It occurs when an ML model is too simple to capture the underlying patterns in the data. An underfit model lacks the complexity needed to learn from the training data. Hence, it fails to capture the relationship between the features and the target variable adequately.

Since the model is failing to consider all the factors, it becomes useless.

What is an example of an Underfitting Model?

Let’s revisit the house pricing example. If the model is underfitting, it might ignore significant factors. These include the area or location, and it may predict house prices solely based on the number of rooms. Such a simplistic model would perform poorly on both the training and testing data. And the main reason is that it fails to capture the complexity of real-world pricing factors.

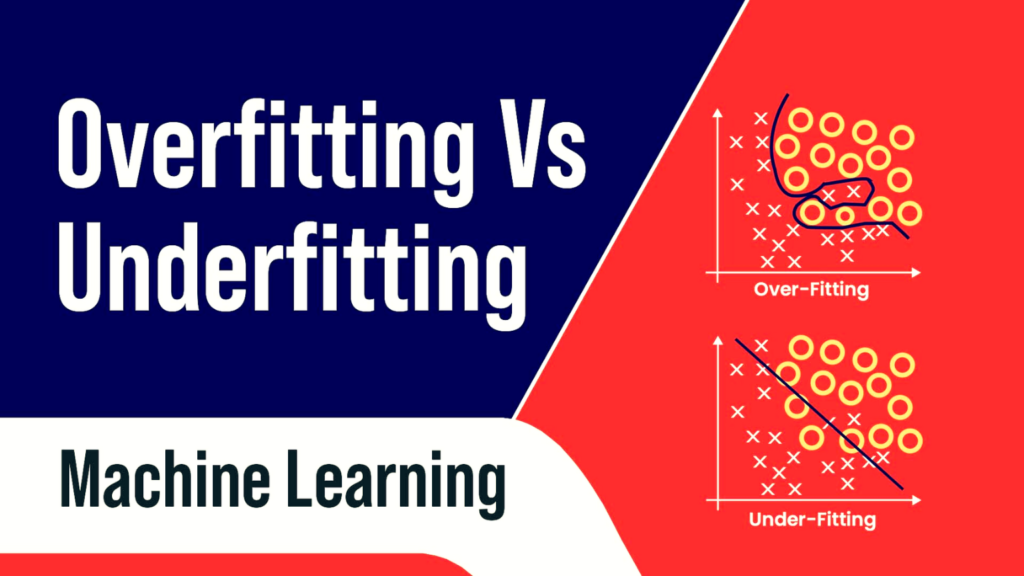

Overfitting vs Underfitting: The Battle of Complexity

In essence, the battle of underfitting vs overfitting in machine learning is a trade-off between bias and variance. Simply put, it’s a trade-off between oversimplification and excessive complexity. An underfit model suffers from high bias; it consistently misses important trends. On the other hand, an overfit model has high variance. It is highly sensitive to the training data and performs poorly on unseen data.

Overfitting and Underfitting with Examples

To put the concept of overfitting vs underfitting in context, imagine trying to fit a curve to a set of data points.

- In the case of overfitting, the model tries to pass through every single data point, creating a highly complex curve that follows every outlier and noise. It is like trying to reach your destination by following every twist and turn of the road. Whereas even a straight path would serve the purpose.

- In the case of underfitting, the model fails to capture the overall trend of the data. This creates a simplistic curve that does not follow the data points closely. This is like trying to reach your destination by following a straight line. In doing so, it ignores the actual path of the road.

The goal of an ideal model is to find a balance. It should be complex enough to capture the trend, yet not too complex to follow every noise or outlier. After all, the goal of machine learning is not to memorize training data but to generalize well to unseen data.

Conclusion

Overfitting and underfitting are significant challenges in machine learning. By understanding these concepts, one can better design and tune models to avoid these pitfalls. Measures such as cross-validation, regularization, and ensemble methods can help strike a balance between complexity and simplicity. The goal should be to optimize the model’s ability to learn from the past and predict the future.