Machine learning algorithms are becoming increasingly popular in various fields, from finance to healthcare. However, one of the biggest challenges in machine learning is preventing overfitting, which occurs when a model is too complex and fits the training data too closely, resulting in poor performance on new, unseen data. In this article, we will explore the importance of training data and validation data in preventing overfitting, and discuss a new algorithm proposed by Andrew Y. Ng to overcome this issue.

Training Data vs Validation Data

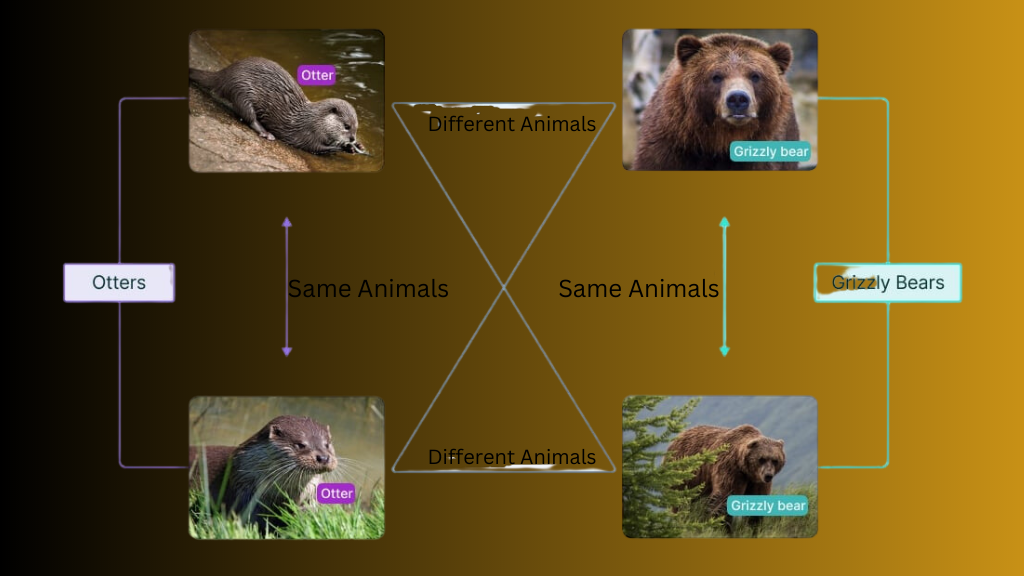

In machine learning, the goal is to create a model that can accurately predict outcomes based on input data. To do this, we need to train the model on a set of data, known as the training data. The model learns from the training data and adjusts its parameters to minimize the error between the predicted outcomes and the actual outcomes.

However, simply training the model on the training data is not enough. We also need to evaluate the model’s performance on new, unseen data, known as the validation data. This is important because the model may have learned to fit the training data too closely, resulting in poor performance on new data. By evaluating the model on the validation data, we can get an estimate of how well the model will perform on new data.

CES 2023 Robotics Innovation Awards | Best New Robot Ventures

Overfitting and Underfitting

One of the biggest challenges in machine learning is finding the right balance between overfitting and underfitting.

Overfitting occurs when the model is too complex and fits the training data too closely, resulting in poor performance on new data.

Underfitting occurs when the model is too simple and fails to capture the underlying patterns in the data, resulting in poor performance on both the training data and new data. To prevent overfitting, we need to find the right balance between model complexity and model performance.

This is where the validation data comes in. By evaluating the model on the validation data, we can get an estimate of how well the model will perform on new data. If the model performs well on the validation data, but poorly on the training data, it may be overfitting. If the model performs poorly on both the training data and the validation data, it may be underfitting.

CES 2023 | When will robots take over the world?

Preventing Overfitting with LOOCVCV

In his paper, “Preventing Overfitting of Cross-Validation Data,” Andrew Y. Ng proposes a new algorithm, LOOCVCV, to prevent overfitting of cross-validation data. The algorithm involves selecting the hypothesis with the lowest cross-validation error, but with a twist.

Instead of selecting the hypothesis with the lowest cross-validation error across all the training data, LOOCVCV selects the hypothesis with the lowest cross-validation error across all but one data point.

This process is repeated for each data point, resulting in a set of hypotheses, each trained on all but one data point. The final hypothesis is then selected based on the average performance across all the hypotheses. This approach helps to prevent overfitting by ensuring that the model is not too closely fitted to any one data point. Experimental results show that LOOCVCV consistently outperforms selecting the hypothesis with the lowest cross-validation error.

Limitations and Potential Drawbacks

While LOOCVCV is a promising algorithm for preventing overfitting, it is not without its limitations and potential drawbacks.

One limitation is that it may be computationally expensive, especially for large datasets. Another limitation is that it may not work well for datasets with a small number of data points, as there may not be enough data to train multiple hypotheses. Additionally, LOOCVCV may not be suitable for all types of machine learning problems.

For example, it may not work well for problems where the input data is highly correlated, as removing one data point may not significantly change the model’s performance.

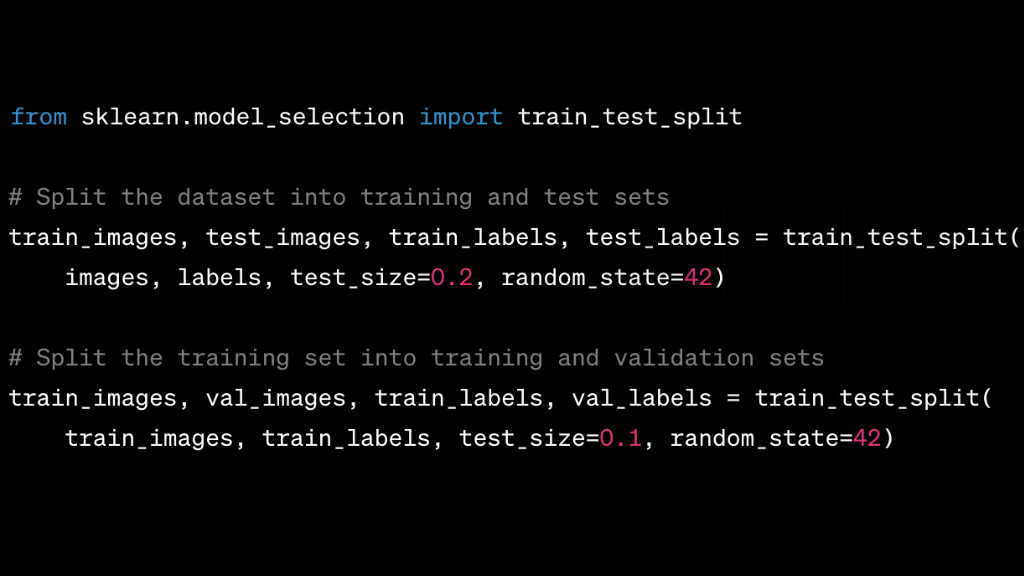

Balancing Act: Train, Validation, Test Split Ratio

The train, validation, and test split ratio is crucial. While there’s no hard and fast rule, a common split ratio is 70:15:15. That is, 70% of the data is used for training, and the remaining 30% is evenly split between validation and testing. However, these proportions might vary depending on the specifics of your dataset. Also, the proportions might change depending on the problem you’re trying to solve.

The Bottom Line

Preventing overfitting is a crucial step in machine learning, and requires finding the right balance between model complexity and model performance. By using both training data and validation data, we can evaluate the model’s performance on new, unseen data, and prevent overfitting. Andrew Y. Ng’s LOOCVCV algorithm is a promising approach to preventing the overfitting of cross-validation data and has shown consistent improvements over traditional methods. However, it is important to consider the limitations and potential drawbacks of the algorithm and to choose the approach that best fits the specific machine-learning problem at hand.

Are validation and training data the same?

Both validation and training data are used during the process of creating a machine learning model. However, they serve different functions. Training data is used for the model to learn, while validation data is used to tune the model’s parameters. Validation data ensures that the model is generalizing well beyond the training data. Hence, they are not the same.

What is the purpose of using a validation set?

The primary purpose of a validation set is to prevent overfitting during the training phase. It provides a platform to validate the model’s performance and make necessary adjustments. This helps ensure the model doesn’t simply memorize the training data, but is capable of generalizing well to unseen data.

What is the difference between validation and testing in machine learning?

Validation and testing in machine learning have different objectives. Validation data is used during the training phase to adjust the model’s parameters and control overfitting. Whereas, testing data is used after the model has been trained and fine-tuned to provide an unbiased evaluation.