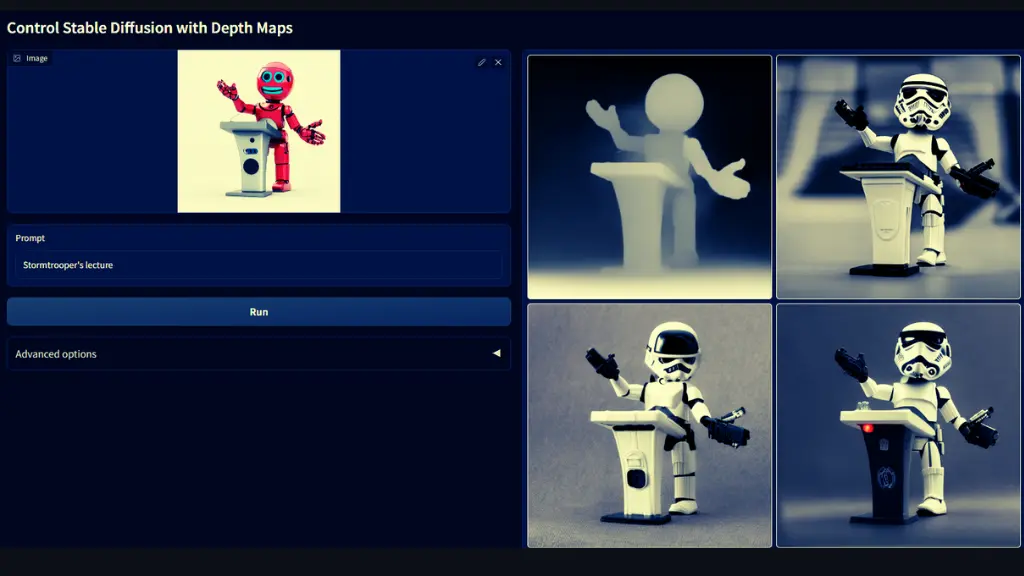

If you’re interested in image synthesis, you may have heard of ControlNet. It lets you steer a pretrained text-to-image diffusion model, such as Stable Diffusion, with an extra input image, for example an edge map, a depth map, or a human pose. This gives you much more control over the composition of the result than a text prompt alone. In this article, we’ll explore what ControlNet is, how it works, and why it’s such an exciting development in the field of image synthesis.


What is ControlNet?

ControlNet is a neural network architecture introduced in the 2023 paper “Adding Conditional Control to Text-to-Image Diffusion Models.” A text-to-image diffusion model such as Stable Diffusion already generates images from text descriptions. ControlNet adds a second input: a conditioning image such as a Canny edge map, a depth map, a segmentation map, or a human pose skeleton. The generated image then follows both the text prompt and the spatial structure of the conditioning image.
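Conditioning images are usually produced by running a detector over a reference image. The Canny edge ControlNet, for instance, expects an edge map. The sketch below is a simplified stand-in and assumes a grayscale image stored as nested Python lists. It computes a Sobel gradient magnitude and thresholds it into a binary edge map. It is not the full Canny algorithm (there is no non-maximum suppression or hysteresis), and the function name `edge_map` and its threshold are illustrative choices.

```python
import math

# Sobel kernels for horizontal (x) and vertical (y) intensity gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def edge_map(image: list[list[float]], threshold: float = 1.0) -> list[list[int]]:
    """Return a binary edge map (1 = edge) the same size as `image`.

    Simplified stand-in for a Canny detector: Sobel gradient magnitude
    followed by a fixed threshold. Border pixels are left as 0.
    """
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            # Convolve the 3x3 neighbourhood with both kernels.
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            if math.hypot(gx, gy) > threshold:
                edges[y][x] = 1
    return edges


# A 5x6 image: dark left half, bright right half (one vertical edge).
img = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
for row in edge_map(img):
    print(row)
```

The printed map marks the two columns on either side of the brightness step. A map like this, at full image resolution, is the kind of input an edge-conditioned ControlNet receives alongside the text prompt.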

How does ControlNet work?

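ControlNet works by attaching itself to a pretrained diffusion model rather than retraining it. The weights of the original model are locked, and a trainable copy of its encoding layers is created. The conditioning image is fed into the trainable copy. The copy's outputs are then added back into the locked model through “zero convolutions”, which are 1×1 convolution layers whose weights and bias are initialized to zero. Because these layers output zero at the start of training, the combined model initially behaves exactly like the original. The influence of the conditioning image is then learned gradually.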
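The ControlNet paper writes each controlled block as y_c = F(x; Θ) + Z(F(x + Z(c; Θz1); Θc); Θz2). Here F is a network block with locked weights Θ, Θc are the weights of its trainable copy, c is the conditioning input, and Z is a zero convolution. The toy sketch below makes two simplifications. First, a feature map is a single list of per-channel values, so a 1×1 convolution becomes a small matrix multiply. Second, a "block" is just an elementwise tanh. Neither reflects how Stable Diffusion's U-Net is actually built. The sketch only shows why the combined model starts out identical to the original.

```python
import math

Vector = list[float]


class ZeroConv1x1:
    """A 1x1 convolution at one spatial location: y = W @ x + b.

    Weight matrix and bias start at zero, like ControlNet's zero convolutions.
    """

    def __init__(self, channels: int) -> None:
        self.weight = [[0.0] * channels for _ in range(channels)]
        self.bias = [0.0] * channels

    def __call__(self, x: Vector) -> Vector:
        return [
            sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(self.weight, self.bias)
        ]


def make_block(scale: float):
    """Toy stand-in for a network block F(x; theta): tanh(scale * x)."""
    return lambda x: [math.tanh(scale * xi) for xi in x]


channels = 3
locked_block = make_block(scale=0.5)    # F(.; Θ): frozen, never updated
trainable_copy = make_block(scale=0.5)  # F(.; Θc): starts as a copy of Θ
zero_in = ZeroConv1x1(channels)         # Z(.; Θz1)
zero_out = ZeroConv1x1(channels)        # Z(.; Θz2)


def controlled_block(x: Vector, c: Vector) -> Vector:
    """y_c = F(x; Θ) + Z(F(x + Z(c; Θz1); Θc); Θz2)."""
    injected = [xi + ci for xi, ci in zip(x, zero_in(c))]
    control = zero_out(trainable_copy(injected))
    return [yi + ri for yi, ri in zip(locked_block(x), control)]


x = [0.2, -1.0, 0.7]  # features arriving from the previous layer (toy values)
c = [5.0, 5.0, 5.0]   # encoded conditioning image (arbitrary toy values)
print(controlled_block(x, c) == locked_block(x))  # True at initialization
```

Only the trainable copy and the two zero convolutions would receive gradient updates during training. `locked_block` stays fixed, which is what protects the pretrained model.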

Diffusion Model

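A diffusion model is a type of generative model that learns to reverse a gradual noising process. During training, Gaussian noise is added to training images over many small steps, and a neural network learns to predict the noise that was added. To generate an image, the model starts from pure random noise and removes it step by step. Because the starting noise is random, each run produces a different image. (Stable Diffusion performs this process in a compressed latent space rather than directly on pixels.) In ControlNet, the denoising network is conditioned on both the text prompt and the conditioning image. The result therefore matches the prompt while following the structure of the control input.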
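The forward (noising) half of this process has a closed form: x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, where ε is standard Gaussian noise and ᾱ_t is the running product of (1 − β_s). The sketch below assumes the linear β schedule from the original DDPM paper (1e-4 to 0.02 over 1000 steps) and represents an "image" as a short list of floats. Stable Diffusion uses its own schedule and latent tensors, so treat the numbers as illustrative. Sampling, which needs a trained noise-prediction network, is not shown.

```python
import math
import random

T = 1000  # number of diffusion steps (assumed, as in the DDPM paper)


def linear_betas(num_steps: int, beta_start: float = 1e-4,
                 beta_end: float = 0.02) -> list[float]:
    """Per-step noise variances beta_t, increasing linearly."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


betas = linear_betas(T)

# alpha_bar[t] = product of (1 - beta_s) for s <= t (t is 0-indexed): the
# share of the original signal's variance that survives to step t.
alpha_bar = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bar.append(running)


def add_noise(x0: list[float], t: int,
              rng: random.Random) -> tuple[list[float], list[float]]:
    """Sample x_t ~ q(x_t | x_0) in closed form; also return the noise used.

    During training, the network is asked to predict `eps` from x_t and t.
    """
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    signal, noise = math.sqrt(alpha_bar[t]), math.sqrt(1.0 - alpha_bar[t])
    xt = [signal * a + noise * e for a, e in zip(x0, eps)]
    return xt, eps


rng = random.Random(0)
x0 = [0.5, -0.25, 1.0]  # a tiny "image" with three pixels
for t in (0, 250, 999):
    xt, _ = add_noise(x0, t, rng)
    print(t, round(alpha_bar[t], 5), [round(v, 3) for v in xt])
```

At t = 0 the sample is almost exactly x_0. By the last step ᾱ_t is tiny, so x_t is essentially pure noise, which is where generation starts.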

Composable Conditions

One of the key features of ControlNet is that conditions can be composed. Each ControlNet is trained for one type of conditioning image, and several ControlNets can be applied to the same Stable Diffusion model at once by adding their outputs together. For example, one ControlNet might supply a human pose while another supplies a depth map. The pose of a figure and the layout of the scene can then be controlled separately.
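The ControlNet paper composes multiple ControlNets by directly adding their outputs to the Stable Diffusion model's features. The minimal sketch below assumes each ControlNet's contribution at one layer has already been computed as a list of residual values. The function `compose_controls` and its optional per-condition weights are hypothetical. The diffusers library exposes a similar idea through the `controlnet_conditioning_scale` argument of its ControlNet pipelines, but this is not that code.

```python
from typing import Optional


def compose_controls(
    base_features: list[float],
    control_residuals: dict[str, list[float]],
    weights: Optional[dict[str, float]] = None,
) -> list[float]:
    """Add the residuals from several ControlNets to one layer's features.

    `control_residuals` maps a condition name (e.g. "pose", "depth") to the
    output that condition's ControlNet produced for this layer. Each residual
    is scaled by its weight (default 1.0) and summed onto the base features.
    """
    weights = weights or {}
    out = list(base_features)  # copy so the locked features are not mutated
    for name, residual in control_residuals.items():
        scale = weights.get(name, 1.0)
        for i, r in enumerate(residual):
            out[i] += scale * r
    return out


base = [1.0, 2.0, 3.0]  # locked model's features (toy values)
residuals = {
    "pose": [0.5, 0.0, -0.5],  # hypothetical pose-ControlNet output
    "depth": [0.0, 1.0, 1.0],  # hypothetical depth-ControlNet output
}
print(compose_controls(base, residuals))                  # [1.5, 3.0, 3.5]
print(compose_controls(base, residuals, {"depth": 0.5}))  # [1.5, 2.5, 3.0]
```

Because composition is just addition, adding or removing a condition only changes which residuals are summed. Lowering a weight weakens that condition's influence without affecting the others.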

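Robust Training on Small Datasets

Another important feature of ControlNet is that it can be trained on small datasets without distorting the pretrained model. This comes from two design choices rather than from data augmentation. First, the original model's weights stay locked while a trainable copy learns the new condition, so fine-tuning cannot overwrite what the pretrained model already knows. Second, the trainable copy is connected through zero convolutions, layers initialized to zero, so no random noise is injected into the model's features at the start of training. The ControlNet paper reports that training is robust with both small (under 50k images) and large (over one million images) datasets.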
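ControlNet's zero convolutions are 1×1 convolution layers whose weights and bias start at zero. A natural worry is that such a layer can never learn. The ControlNet authors answer with a simple argument. For y = w·x + b, the gradient of y with respect to w is x, which is nonzero whenever the input features are nonzero, so the first update already moves w away from zero. The scalar sketch below illustrates this. It assumes a squared-error loss and one plain gradient-descent step with an illustrative learning rate, and `zero_conv_step` is a hypothetical helper.

```python
def zero_conv_step(x: float, target: float,
                   lr: float = 0.1) -> tuple[float, float]:
    """One gradient-descent step for y = w * x + b, starting from w = b = 0.

    Loss is (y - target) ** 2. Returns the updated (w, b).
    """
    w, b = 0.0, 0.0             # zero initialization
    y = w * x + b               # output is 0, so the locked model is unchanged
    dloss_dy = 2.0 * (y - target)
    grad_w = dloss_dy * x       # dy/dw = x: nonzero whenever x != 0
    grad_b = dloss_dy * 1.0     # dy/db = 1
    return w - lr * grad_w, b - lr * grad_b


w, b = zero_conv_step(x=2.0, target=1.0)
print(w, b)  # 0.4 0.2
```

The gradient flowing back to the input x is w, which is zero on the very first step. Once w has moved away from zero, the trainable copy behind the layer starts receiving gradient as well.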

Why is ControlNet important?

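ControlNet is an important development in the field of image synthesis because it gives users control over the structure of the output, not just its content. With a text prompt alone, it is hard to specify exactly where objects appear or how a person is posed. A conditioning image can fix that layout directly. This is particularly useful in applications where precise control over the generated images matters, such as the creation of medical images or the design of products.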

ControlNet has several practical applications. In product design, for example, a designer can use a sketch of a piece of furniture or clothing as the conditioning image and generate realistic renderings from it. Generating images of products before they are manufactured can help to reduce the time and cost of the design process.

ControlNet could also be used in video game design and virtual reality. Because a depth map or pose can fix the layout of each image, artists could generate many variations of a scene or character that stay consistent with one another. This may help in building more immersive and realistic virtual environments.


Conclusion

ControlNet is an exciting development in the field of image synthesis. Its technical features, such as composable conditions and robust training on small datasets, make it a powerful tool for generating high-quality images with fine-grained control. Its potential applications in product design, medical imaging, and virtual reality make it a versatile technology that could transform a wide range of industries. As researchers continue to explore the possibilities of ControlNet, we can expect to see even more innovative applications in the future.