Object detection is a crucial task in computer vision that involves identifying and localizing objects within an image or video. Over the years, there has been a significant increase in research on object detection techniques such as object classification, counting of objects, and object monitoring. In this article, we will focus on the state-of-the-art object detection with YOLO (You Only Look Once), and how it has revolutionized object detection.

Electric Vehicles Vs Fuel Vehicles: A Comparative Study

Object Detection

Object detection is a fundamental task in computer vision that involves identifying and localizing objects within an image or video. It has gained much attention over the years due to its numerous applications in various fields, such as autonomous driving, surveillance, and robotics.

Object detection involves two main tasks: object localization and object classification. Object localization involves identifying the location of an object within an image, while object classification involves identifying the type of object.

Traditional Object Detection Techniques

Traditional object detection techniques involve a two-stage process that includes region proposal and object classification. The region proposal generates a set of candidate regions that may contain objects, while the object classification stage classifies the objects within the candidate regions. These techniques are computationally expensive and require a lot of memory, making them unsuitable for real-time applications.

State-of-the-Art Object Detection with YOLO

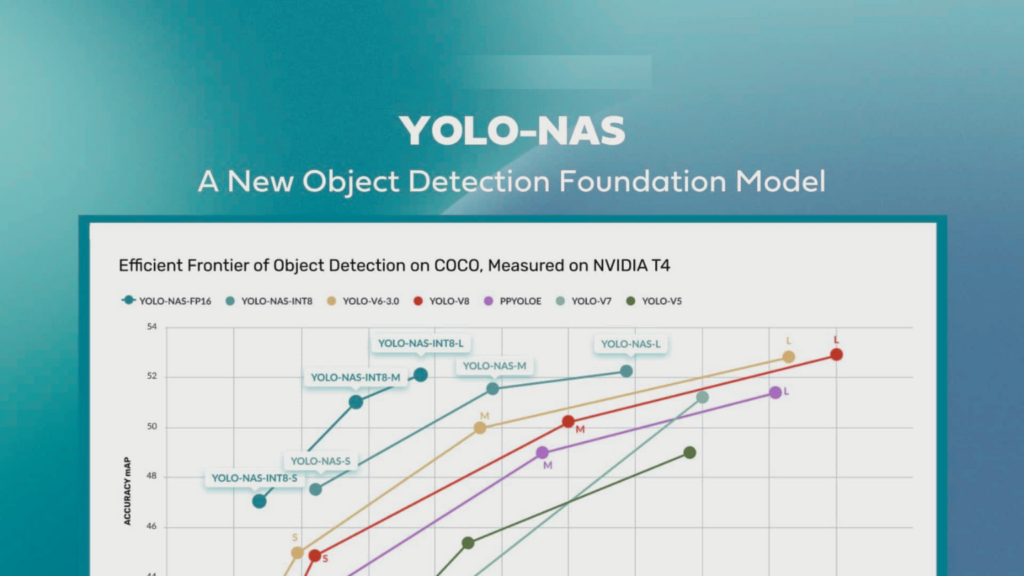

YOLO-NAS is a next-generation object detection model generated by Deci’s Neural Architecture Search Technology, AutoNAC™. It delivers state-of-the-art (SOTA) performance with unparalleled accuracy-speed performance, outperforming other models such as YOLOv5, YOLOv6, YOLOv7, and YOLOv8.

State-of-the-art object detection techniques have been developed to overcome the limitations of traditional techniques. One such technique is You Only Look Once (YOLO), which is a real-time object detection system that can detect objects in an image or video in a single pass. YOLO uses a single neural network to predict the bounding boxes and class probabilities for the objects within an image. This makes it faster and more accurate than traditional techniques.

Superior Real-Time Object Detection Capabilities

YOLO-NAS provides superior real-time object detection capabilities and production-ready performance. It is a game-changer in the world of object detection, providing AI teams with tools to remove development barriers and attain efficient inference performance more quickly.

Proprietary Neural Architecture Search Technology

Deci’s proprietary Neural Architecture Search technology, AutoNAC™, generated the YOLO-NAS model. AutoNAC™ is a powerful tool that automates the process of designing neural network architectures. It uses a combination of reinforcement learning and evolutionary algorithms to search for the optimal architecture for a given task. This approach allows for the creation of highly efficient and effective models that outperform traditional hand-designed models.

Open-source license and Pre-Trained Weights

The YOLO-NAS model is available under an open-source license with pre-trained weights available for non-commercial use on SuperGradients, Deci’s PyTorch-based, open-source, computer vision training library. With SuperGradients, users can train models from scratch or fine-tune existing ones, leveraging advanced built-in training techniques like Distributed Data-Parallel, Exponential Moving Average, Automatic mixed precision, and Quantization Aware Training.

Potential Use Cases

YOLO-NAS has potential use cases in various industries, including retail and security. In retail, it can be used for object detection in inventory management, product placement, and customer behavior analysis. In security, it can be used for surveillance, crowd monitoring, and facial recognition.

YOLO Architecture

The YOLO architecture consists of a convolutional neural network (CNN) that takes an input image and outputs a set of bounding boxes and class probabilities for the objects within the image. The CNN is divided into two parts: the feature extraction part and the detection part. The feature extraction part is responsible for extracting features from the input image, while the detection part is responsible for predicting the bounding boxes and class probabilities for the objects within the image.

HDFS vs S3: Understanding the Differences, Advantages, and Use Cases

Harnessing YOLO: From Setup to Real-time Object Detection

Installing Darknet (YOLO framework)

git clone https://github.com/AlexeyAB/darknet

cd darknet

makeDownloading Pre-trained Weights

To quickly start detecting objects on images, you can download YOLO’s pre-trained weights.

wget https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v3_optimal/yolov4.weightsObject Detection on an Image:

Once you’ve got the weights, use the following to detect objects on an image:

./darknet detector test cfg/coco.data cfg/yolov4.cfg yolov4.weights data/dog.jpgDisplaying Detection Results

After running the detection, an image named ‘predictions.jpg’ will be saved. You can view this using any image viewer or in Python as:

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

img = mpimg.imread('predictions.jpg')

imgplot = plt.imshow(img)

plt.show()Training YOLO on Custom Data

To train YOLO on custom data, you need to have your data in YOLO format and modify the configuration files accordingly. Here’s a simple training command:

./darknet detector train cfg/custom.data cfg/yolov4-custom.cfg yolov4.conv.137Real-time Detection on a Webcam

You can also use YOLO for real-time object detection on a webcam feed:

./darknet detector demo cfg/coco.data cfg/yolov4.cfg yolov4.weights -c 0Note: The -c 0 argument specifies that the first camera device should be used.

Tuning Detection Threshold

If you’re getting too many false positives or missing objects, you might want to adjust the detection threshold:

./darknet detector test cfg/coco.data cfg/yolov4.cfg yolov4.weights data/dog.jpg -thresh 0.5YOLO Training

Training YOLO involves feeding the CNN with a large dataset of labeled images. The CNN learns to predict the bounding boxes and class probabilities for the objects within the images by minimizing the loss function. The loss function measures the difference between the predicted bounding boxes and class probabilities and the ground truth bounding boxes and class probabilities.

YOLO Performance

YOLO has been shown to outperform traditional object detection techniques in terms of speed and accuracy. YOLO can detect objects in real-time, making it suitable for applications that require fast object detection. YOLO also has a high accuracy rate, with an average precision of 63.4% on the PASCAL VOC 2012 dataset.

YOLO Variants

Several variants of YOLO have been developed to improve its performance. YOLOv2, for example, uses a more complex network architecture and data augmentation techniques to improve its accuracy. YOLOv3 uses a feature pyramid network to detect objects at different scales, while YOLOv4 uses a more efficient backbone network and data augmentation techniques to improve its accuracy.

Challenges and Future Directions

Despite its success, YOLO still faces several challenges. One of the main challenges is detecting small objects, as YOLO struggles to detect objects that are smaller than the grid size. Another challenge is detecting objects in cluttered scenes, as YOLO may detect multiple objects within a single bounding box. Future research directions for YOLO include improving its ability to detect small objects and developing more efficient network architectures.

Conclusion

YOLO is a state-of-the-art object detection technique that has revolutionized object detection. Its real-time performance and high accuracy make it suitable for a wide range of applications. YOLO has several variants that have improved its performance, and future research directions aim to address its remaining challenges. As object detection continues to play a crucial role in computer vision, YOLO is likely to remain a key technique in the field.

What is YOLO, and how does it work?

How is YOLO trained?

What are the variants of YOLO, and how do they differ?

YOLOv2 uses a more complex network architecture and data augmentation techniques to improve its accuracy.

YOLOv3 uses a feature pyramid network to detect objects at different scales.

YOLOv4 uses a more efficient backbone network and data augmentation techniques to improve its accuracy.